Introduction to the ALS-U EPICS Environment Training Guide

Welcome

Welcome to the official training documentation for the Advanced Light Source Upgrade (ALS-U) EPICS Environment. This guide serves as the central resource for understanding, installing, using, and maintaining the standardized EPICS software environment specifically tailored for developing and deploying Input/Output Controllers (IOCs) at ALS-U.

This training is hosted on both GitHub Pages and GitLab Pages as part of a set of resources aimed at providing comprehensive guidance for the ALS-U EPICS development workflow.

Purpose of the ALS-U EPICS Environment

The ALS-U EPICS environment is maintained to support the development of robust, consistent, and maintainable IOC applications across the facility. It is typically distributed as a pre-built package (originating from repositories like https://github.com/jeonghanlee/EPICS-env and https://github.com/jeonghanlee/EPICS-env-support) and consists of:

- A specific version of EPICS Base.

- A defined set of EPICS Modules (like Asyn, StreamDevice, Calc, PVXS, etc.) built against that base version.

- Standardized IOC templates and build tools (including scripts like generate_ioc_structure.bash) to ensure common structure and practices.

- Defined procedures for development, testing, deployment, and maintenance.

The primary goals of establishing this environment are to:

- Simplify the development process for IOC engineers.

- Ensure high code quality and reliability.

- Promote consistency across all ALS-U IOC projects, making them easier to understand and manage.

- Reduce tedious and error-prone procedures often associated with manual dependency management and deployment in traditional EPICS development.

Purpose and Scope of This Training Guide

This training guide provides a step-by-step walkthrough of the ALS-U EPICS Environment, covering everything from initial setup to advanced configuration and development techniques. It aims to provide users with the knowledge and skills needed to effectively develop, test, and deploy EPICS IOCs for ALS-U.

Key topics covered include:

- Setting up and verifying the development environment.

- Developing basic and advanced IOC applications using standardized templates and tools.

- Simulating device communication for testing and development.

- Utilizing iocsh scripts and database templates for efficient and scalable configuration.

- Understanding the structure and function of key IOC configuration files (st.cmd, RELEASE, CONFIG_SITE, system.dbd).

- Integrating Continuous Integration (CI) practices into the development workflow (details specific to the ALS-U internal GitLab repository).

Target Audience

This training guide is primarily intended for engineers, software developers, and scientists involved in creating, deploying, or maintaining EPICS IOCs for the ALS-U project at LBNL. While its focus is on the ALS-U implementation, much of the content is also applicable and valuable to the broader global EPICS community.

While some sections assume familiarity with EPICS core concepts and Linux environments, the initial chapters are designed to guide users new to this specific ALS-U environment through the setup and basic usage. Later chapters delve into more specific examples and advanced topics.

Training Structure (Chapters)

This guide is organized into chapters designed to lead you through the ALS-U EPICS development process:

- Chapter 1: Environment Setup and Verification: Focuses on getting the environment operational, covering installation, initial testing, and understanding host architecture concepts like EPICS_HOST_ARCH and OS-specific directories.

- Chapter 2: First EPICS IOC and GitLab CI: Guides you through creating and expanding your first basic EPICS IOC within the environment and integrating it with GitLab Continuous Integration (CI) pipelines.

- Chapter 3: Second EPICS IOC and Device Simulation: Builds on basic development by demonstrating how to configure an IOC for device communication and simulating that communication using a TCP-based simulator.

- Chapter 4: Advanced IOC Configuration and Startup: Delves into more complex configuration techniques, including working with iocsh scripts, managing multiple devices in st.cmd, using database templates and substitution, and understanding the phases of the IOC startup sequence. Includes development of more advanced simulators.

- Chapter 5: Understanding IOC Application Configuration: Provides a deep dive into the critical configuration files that define an IOC’s behavior, such as the style of st.cmd, configure/RELEASE, configure/CONFIG_SITE, and system.dbd.

Note: This structure reflects the current organization of the training guide and may evolve over time.

How to Use This Training Guide

- New Users: Start with Chapter 1 (Installation, Testing) and proceed through Chapter 2 (First IOC) to build a foundational understanding.

- Experienced Users: You may jump directly to relevant sections based on your needs, such as advanced configuration (Chapter 4) or detailed file explanations (Chapter 5).

Online Version

The latest official version of this training guide is always available online at the ALS-U internal GitLab Pages site:

https://jeonglee.pages.als.lbl.gov/epics-trainings/

The latest mirror version of this training guide is always available online at the GitHub Pages site:

https://jeonghanlee.github.io/epics-trainings/

General Prerequisites

Most sections assume you are working in a Linux environment (like Debian 12 or Rocky 8) and have basic familiarity with shell commands, text editors (like nano, vi, emacs), and version control with git. Specific EPICS version requirements are detailed in the installation section.

We hope this training guide serves as a valuable resource for your EPICS development work at ALS-U and at other EPICS facilities.

Chapter 1: Environment Setup and Verification

Welcome to the ALS-U EPICS Environment documentation. This first chapter focuses on getting the environment operational. It covers the installation procedure and the essential steps to test your setup.

This chapter covers the following topics:

- Installation: Provides detailed steps to set up the ALS-U EPICS Environment.

- Test Environment: Outlines how to launch and run tests to ensure the environment is functioning correctly after installation.

- Host Architecture and OS-Specific folder: Explains the environment’s approach to host architecture support, focusing on EPICS_HOST_ARCH, the linux-x86_64 standard, and the role of OS-specific directories.

1.1 EPICS Environment Configuration and Installation

This section covers the essential first steps for setting up the ALS-U EPICS environment locally. You will clone the central environment repository using git from the ALS GitLab server or its GitHub mirror, and then learn how to activate a specific version (e.g., for Debian 12 or Rocky 8) by sourcing the appropriate setEpicsEnv.bash script in your terminal session. Basic verification steps are also included.

Lesson Overview

In this lesson, you will learn how to do the following:

- Clone the ALS-U EPICS environment repository using git

- Configure the ALS-U EPICS environment locally

- Test the cloned EPICS environment with a few EPICS command line tools

- Activate a specific version of the EPICS environment within a terminal session

Get the ALS-U EPICS environment

Clone the ALS-U EPICS repository using Git.

Clone the ALS-U EPICS environment by using git clone

Users may need to have SSH access to the ALS GitLab repository to clone the following repositories.

# Example for the ALS-U Internal GitLab (Official)

$ git clone --depth 1 ssh://git@git-local.als.lbl.gov:8022/alsu/epics/alsu-epics-environment.git ~/epics

# or

# Example for the Mirror site on GitHub

$ git clone --depth 1 https://github.com/jeonghanlee/EPICS-env-distribution.git ~/epics

After cloning the repository, the environment is available in the ${HOME}/epics folder. In most cases, it is ready to use.

Configure the ALS-U EPICS environment

The ALS-U EPICS environment supports multiple operating system versions and EPICS versions. Please note that the pre-built binaries included in this environment currently target the Linux x86_64 architecture exclusively.

To select and activate a specific environment version in your current terminal session, you need to source the appropriate setEpicsEnv.bash script. The path will include the specific version number, which may change over time.

First, find the available versions by listing the directory contents:

$ ls ~/epics/
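
If the top-level listing does not make the available combinations obvious, a small helper can list every setup script under the clone. This is only a minimal sketch: the function name list_epics_envs is hypothetical, and it assumes the <version>/<os>/<epics-version>/ layout seen in the example paths in this section.

```shell
# Hypothetical helper: list every setEpicsEnv.bash below an environment root.
# Assumes the layout <root>/<version>/<os>/<epics-version>/setEpicsEnv.bash.
list_epics_envs() {
    env_root="${1:-$HOME/epics}"
    # Errors (for example a missing root directory) are silenced on purpose.
    find "$env_root" -mindepth 4 -maxdepth 4 -type f -name setEpicsEnv.bash 2>/dev/null | sort
}

# Print the candidates found in the default clone location, if any.
list_epics_envs "$HOME/epics"
```

Each line printed is a path that can be passed to source directly.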

Then, use the version you found to source the script for your operating system and desired EPICS version:

# Example for EPICS 7.0.7 on Debian 12 (x86_64)

source ~/epics/1.1.2/debian-12/7.0.7/setEpicsEnv.bash

# or

# Example for EPICS 7.0.7 on Rocky 8.10 (x86_64)

source ~/epics/1.1.2/rocky-8.10/7.0.7/setEpicsEnv.bash

Sourcing the script sets up necessary environment variables like EPICS_BASE, PATH, and LD_LIBRARY_PATH. The output should resemble this (user and specific paths will vary):

Set the EPICS Environment as follows:

THIS Source NAME : setEpicsEnv.bash

THIS Source PATH : /home/jeonglee/epics/1.1.2/debian-12/7.0.7

EPICS_BASE : /home/jeonglee/epics/1.1.2/debian-12/7.0.7/base

EPICS_HOST_ARCH : linux-x86_64 # <-- Note the architecture

EPICS_MODULES : /home/jeonglee/epics/1.1.2/debian-12/7.0.7/modules

PATH : ......................

LD_LIBRARY_PATH : ......................

Enjoy Everlasting EPICS!

Note how the EPICS_HOST_ARCH variable and the paths explicitly mention linux-x86_64.
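
To re-check these variables later in the same session without sourcing the script again, something like the following could be used. It is a sketch rather than part of the environment: report_epics_env is a hypothetical name, and it only looks at the three variables shown in the sample output above.

```shell
# Hypothetical helper: print the variables the setup script is expected to
# export. An unset variable is reported rather than treated as an error.
report_epics_env() {
    for name in EPICS_BASE EPICS_HOST_ARCH EPICS_MODULES; do
        # Indirect lookup of the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        if [ -n "$value" ]; then
            printf '%-16s: %s\n' "$name" "$value"
        else
            printf '%-16s: (not set)\n' "$name"
        fi
    done
}

report_epics_env
```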

Testing the Environment

Once the environment is sourced, verify that the EPICS command-line tools are accessible in your PATH:

# Check help output for an EPICS command-line tool (e.g., caput)

$ caput -h

# Verify the location of an EPICS command-line tool (e.g., caget)

$ which caget

If these commands run successfully and show help/path information, you have successfully configured the ALS-U EPICS environment in your current terminal session.
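
The same check can be applied to several tools at once. The sketch below is an illustration only: check_epics_tools is a hypothetical helper, and the tool list is simply the set of clients used later in this chapter.

```shell
# Hypothetical helper: report which commands are reachable through PATH.
# Returns the number of missing commands (0 means all were found).
check_epics_tools() {
    missing=0
    for tool in "$@"; do
        if tool_path=$(command -v "$tool"); then
            echo "found   $tool -> $tool_path"
        else
            echo "missing $tool"
            missing=$((missing + 1))
        fi
    done
    return "$missing"
}

check_epics_tools caget caput pvxget pvxput \
    || echo "Some tools are missing; source setEpicsEnv.bash in this terminal."
```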

Alternative Environment for Other Linux Distributions (Training Contingency)

This training and ALS-U Controls officially support Debian 12 and Rocky 8.10 on the Linux x86_64 architecture, using the primary ALS-U EPICS environment repository cloned into ~/epics as described in the "Get the ALS-U EPICS environment" section above.

Important Considerations:

- This alternative repository provides builds that are functional for the training but may not be the latest official ALS-U EPICS environment production versions.

- It is not the recommended environment for actual development or deployment work on the ALS-U Control System.

- Using this path requires adjusting subsequent commands (like source) and potentially other paths mentioned in the training materials.

Summary

In this section, you learned the fundamental steps to get started with the ALS-U EPICS environment on your local Linux x86_64 machine. You successfully cloned the environment repository using git and learned how to activate a specific version by sourcing the corresponding setEpicsEnv.bash script. Finally, you verified the setup by confirming that essential EPICS command-line tools are available in your path. You are now ready to use this configured environment for running IOCs and developing EPICS applications.

1.2 Your First Running EPICS IOC

In this section, you will run your very first EPICS Input/Output Controller (IOC) using the standard softIocPVX executable included in the environment. You will create a minimal database file defining a single Process Variable (PV) and then use essential EPICS command-line tools like caget, caput, and pvxget to interact with this PV over the network, demonstrating both Channel Access (CA) and the newer PV Access (PVA) protocols.

Lesson Overview

In this lesson, you will learn how to do the following:

- Create a minimal EPICS database (.db) file.

- Run a simple IOC using softIocPVX.

- List PVs within the running IOC using dbl.

- Interact with PVs using caget and pvxget (Read).

- Interact with PVs using caput and pvxput (Write).

- Perform basic connection troubleshooting for CA and PVA.

Make an EPICS Database file

Create the following EPICS database (.db) file and name it water.db. This file defines a single Process Variable (PV).

record(ao, "temperature:water")

{

field(DESC, "Water temperature Setpoint") # Description

field( EGU, "degC") # Engineering Units

field( VAL, "0") # Initial Value

}

This file defines a record instance named temperature:water.

- ao: Specifies that the record type is Analog Output.

- DESC: Stands for Description (a text string).

- EGU: Defines the Engineering Units for the value.

- VAL: Holds the current value (and initial value) of the record.

Save this content as water.db.
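
If you prefer not to open an editor, the file can also be written from the shell with a here-document. This is one possible way to do it, not a required step; the final grep is only a quick sanity check added here.

```shell
# Write water.db in the current directory. The quoted 'EOF' keeps the shell
# from expanding anything inside the database text.
cat > water.db <<'EOF'
record(ao, "temperature:water")
{
    field(DESC, "Water temperature Setpoint") # Description
    field( EGU, "degC")                       # Engineering Units
    field( VAL, "0")                          # Initial Value
}
EOF

# Count the record definitions in the file (one is expected).
grep -c '^record(' water.db
```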

Run your first softioc

Now we start the softIocPVX executable (a generic soft IOC program included in the environment and built by the PVXS module) using the database file we just created.

- Open your first terminal window.

- Ensure your EPICS environment is sourced:

# In Terminal 1 (IOC)

$ source ~/epics/1.1.1/debian-12/7.0.7/setEpicsEnv.bash

- Run softIocPVX, telling it to load (-d) your database file (water.db). Make sure you run this in the same directory where you saved water.db.

# In Terminal 1 (IOC)

$ softIocPVX -d water.db

# Expected Output (will vary slightly):

INFO: PVXS QSRV2 is loaded, permitted, and ENABLED.

Starting iocInit

############################################################################

## EPICS R7.0.7-github.com/jeonghanlee/EPICS-env

## Rev. R7.0.7-dirty

## Rev. Date Git: 2022-09-07 13:50:35 -0500

############################################################################

iocRun: All initialization complete

7.0.7 >

Leave this terminal running.

- At the IOC shell prompt (7.0.7 >), type dbl (database list) and press Enter to see the PVs loaded:

# In Terminal 1 (IOC)

7.0.7 > dbl

temperature:water # Should list the PV you defined

7.0.7 >

Play with EPICS command line tools

Now, let’s interact with the running IOC from a different terminal.

- Open a new, separate terminal window, which we will call Terminal 2.

- Source the ALS-U EPICS environment in this new terminal as well, this time with the disable option, which suppresses the output messages:

# In Terminal 2 (CA/PVA Clients)

$ source ~/epics/1.1.1/debian-12/7.0.7/setEpicsEnv.bash "disable"

- Try reading the initial value and description using Channel Access (caget) and PV Access (pvxget), then write new values using caput (CA) and pvxput (PVA), and read them back:

# In Terminal 2 (CA/PVA Clients)

$ caget temperature:water # Reads the VAL field (e.g., 0) using CA

$ caget temperature:water.DESC # Reads the DESC field using CA (e.g., "Water temperature Setpoint")

$ pvxget temperature:water # Reads the value using PVA (shows structure, e.g., { "value": 0.0 })

# Write value 24 using CA

$ caput temperature:water 24

# Read back using CA and PVA

$ caget temperature:water # Should show 24

$ pvxget temperature:water # Should show { "value": 24.0 }

# Write value 44 using PVA

$ pvxput temperature:water 44

# Read back using CA

$ caget temperature:water # Should show 44

The commands caget, caput, pvxget, and pvxput are simple EPICS command-line clients. caget and caput use the CA (Channel Access) network protocol, while pvxget and pvxput use the newer PVA (PV Access) protocol available in EPICS 7 and later. These network protocols are fundamental to how different EPICS components (IOCs, clients, services) communicate.

Troubleshooting

If your caput or caget commands fail with a message like Channel connect timed out: PVNAME not found., it means the CA client tools cannot find your running IOC over the network.

# Example Error:

$ caget temperature:water

Channel connect timed out: 'temperature:water' not found.

When running the IOC and CA clients on the same machine (like localhost), this often happens because the default CA broadcast mechanism isn’t sufficient or is blocked. You need to explicitly tell the CA clients where to find the IOC server using an environment variable:

- Set EPICS_CA_ADDR_LIST: In the terminal where you run caput/caget (Terminal 2), set this variable to point to the machine running the IOC (in this case, localhost).

# In Terminal 2 (CA Clients)

$ export EPICS_CA_ADDR_LIST=localhost

- Retry the command:

# In Terminal 2 (CA Clients)

$ caget temperature:water

# Expected output (should now work, showing the current value):

temperature:water 44

- If you would also like to use the PVA protocol, define the corresponding environment variable EPICS_PVA_ADDR_LIST for the PVA (PV Access) protocol. We will cover the PVA protocol in a more advanced lesson later.

# In Terminal 2 (PVA Clients)

$ export EPICS_PVA_ADDR_LIST=localhost

$ pvxget temperature:water

# Expected output (should now work, showing structure):

# ...

# { "value": 44.0 }

# ...
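
For a session in which both protocols are used, the two settings can be made together. The sketch below goes slightly beyond the steps above: the two *_AUTO_ADDR_LIST variables do not appear elsewhere in this guide, and setting them to NO (so that only the explicit lists are used) is an optional assumption, not a requirement of the training.

```shell
# Point CA and PVA clients at an IOC running on the same host.
export EPICS_CA_ADDR_LIST=localhost
export EPICS_PVA_ADDR_LIST=localhost

# Assumption (optional): switch off automatic broadcast-address discovery so
# that only the explicit address lists above are searched.
export EPICS_CA_AUTO_ADDR_LIST=NO
export EPICS_PVA_AUTO_ADDR_LIST=NO

# Show what the client tools started from this shell will see.
env | grep -E '^EPICS_(CA|PVA)_(AUTO_)?ADDR_LIST=' | sort
```

These exports affect only the current terminal session; a new terminal starts without them.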

Rocky or Red Hat Variant Firewall

Rocky Linux (a Red Hat variant) runs the firewalld service by default, and it blocks the CA and PVA communication needed for EPICS. For this training, stop and disable the service.

# Run these commands with administrator privileges (e.g., using sudo)

sudo systemctl stop firewalld # stop the firewalld service

sudo systemctl disable firewalld # do not start firewalld at boot

sudo systemctl mask firewalld # prevent firewalld from being started at all

Note that you can instead edit the firewalld configuration to allow specific ports, but this is outside the scope of this introductory training.

Summary

In this lesson, you successfully performed the essential first steps in working with an EPICS IOC:

- Created a minimal database file (water.db) containing an Analog Output (ao) record named temperature:water.

- Ran a basic IOC using the softIocPVX executable, loading your database file.

- Verified the loaded PV using the dbl command in the IOC shell.

- Used command-line tools (caget, caput, pvxget, pvxput) to interact with the PV over the network using both Channel Access (CA) and PV Access (PVA) protocols.

- Learned basic troubleshooting steps for network connectivity issues involving EPICS_CA_ADDR_LIST, EPICS_PVA_ADDR_LIST, and system firewalls (firewalld).

1.3 ALS-U EPICS Environment Design Principles (linux-x86_64 + OS-Specific Folders)

Note: This section discusses the underlying design choices for the EPICS environment’s architecture and cross-distribution support. While important for a deep understanding, some concepts related to build systems, EPICS_HOST_ARCH, operating system specifics, library compatibility (like glibc), and Application Binary Interfaces (ABIs) may be considered advanced. A full grasp of these details is not required for basic environment usage if following standard procedures.

Introduction

This section covers the in-depth design principles and fundamental reasons why the ALS-U EPICS environment standardizes on EPICS_HOST_ARCH=linux-x86_64 for building core EPICS and its modules, while simultaneously utilizing OS-specific folders (e.g., debian-12, rocky-8.10) to manage distribution-level differences. This combined approach addresses the significant challenge of ensuring consistent EPICS operation across different Linux distributions and their various versions, which inherently vary in system libraries, package managers, and configurations. It leverages industry standards while ensuring adaptability across supported operating systems.

Principle One: Adherence to Linux Architecture Standards (linux-x86_64)

A core principle is to align with broader Linux ecosystem standards. The use of architecture names like linux-x86_64 (instead of distribution-specific names like rocky8-x86_64) is rooted in historical conventions, standardization, and practical considerations within the Linux world. The EPICS_HOST_ARCH variable is central to the EPICS build system, guiding the selection of compiler flags, linker options, and determining the output directories for compiled binaries and libraries (e.g., bin/linux-x86_64, lib/linux-x86_64).
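
To see how such a name relates to the machine you are on, the sketch below assembles an "<os>-<machine>" string from uname. It is an approximation for illustration only: guess_host_arch is a hypothetical helper, the assumption is that this pattern matches the EPICS name on common Linux hosts (for example linux-x86_64), and the value set by setEpicsEnv.bash remains authoritative.

```shell
# Hypothetical helper: approximate the host architecture name from uname.
# Assumption: "<lowercase kernel name>-<machine>" matches the EPICS name on
# common Linux hosts; this does not replace the environment's own setting.
guess_host_arch() {
    os=$(uname -s | tr '[:upper:]' '[:lower:]')
    machine=$(uname -m)
    echo "${os}-${machine}"
}

echo "Guessed: $(guess_host_arch)"
echo "Actual : ${EPICS_HOST_ARCH:-(not set)}"
```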

Historical Context

In the early days of Linux, standardized names (i386, alpha, sparc) were crucial for distinguishing builds for different CPU architectures. With the advent of 64-bit x86 architecture around 2003 (AMD64/Intel64), x86_64 quickly became the vendor-neutral standard adopted by major distributions (Debian, Red Hat, etc.). EPICS adopted these conventions for its EPICS_HOST_ARCH variable to identify build targets.

Cross-Distribution Compatibility

A common architecture name (x86_64, arm64) allows upstream projects (including EPICS itself and many modules) and developers to create builds that are fundamentally compatible at the CPU instruction set level across various Linux distributions running on that hardware.

Separation of Concerns

x86_64 describes the hardware architecture, while the distribution version (Debian 12, Rocky 8) describes the software stack (specific library versions like glibc, kernel, tools). Keeping these separate avoids confusion and simplifies compatibility reasoning, particularly concerning critical system library differences and potential ABI incompatibilities between distributions.

Stability and Longevity

Architecture names are stable, while distribution versions change frequently. Tying architecture names to distribution versions would create an unstable and cumbersome naming scheme.

Upstream and Tooling Standards

Build tools (GCC, Autotools, Make) and package managers (APT, RPM/DNF) rely on these standardized architecture names, simplifying development, builds, and packaging.

Historical Convention and Community Adoption

The standard was adopted early and changing it would cause disruption without significant benefit.

Principle Two: Avoiding Unnecessary Build Complexity

A related principle is to minimize redundant effort and complexity. Using distribution-specific architecture names like rocky8-x86_64 for EPICS_HOST_ARCH was rejected because it would lead to significant inefficiencies and risks:

- Massive Duplication of Effort: Requiring separate, nearly identical EPICS Base/module builds for every supported OS version, wasting significant build time.

- Increased Complexity and Maintenance Burden: Fragmenting build artifacts across numerous directories (e.g., bin/debian12-x86_64, lib/rocky8-x86_64) and multiplying the effort required for updates, patches, and testing across all targets.

- Potential for Inconsistencies: Increasing the risk of subtle, unintended differences creeping into builds for different OS versions over time.

Furthermore, the chosen structure (a single linux-x86_64 build plus OS-specific folders) simplifies the process of adding support for new OS versions; it typically involves creating a new OS-specific folder to manage its unique dependencies and configurations, while leveraging the existing linux-x86_64 core components.

Principle Three: Managing Distribution-Specific Variations (debian-12, rocky-8.10 Folders)

While standardizing on EPICS_HOST_ARCH=linux-x86_64 ensures architectural compatibility for the core EPICS build, it’s recognized that critical differences exist between the distributions themselves. Therefore, a key principle is to manage these variations explicitly using OS-specific folders.

Even when running on the same x86_64 hardware, distributions like Debian 12 and Rocky Linux 8 differ in:

- System Library Versions: Versions of core libraries like glibc, libstdc++, OpenSSL, readline, etc., can vary, potentially leading to runtime errors if a binary built against a newer library is run on a system with an older one.

- Package Management: Different tools (apt vs. dnf) and package naming conventions require distinct procedures for installing prerequisites.

- Filesystem Layout: Standard locations for libraries, headers, or configuration files might differ slightly, requiring path adjustments.

- Available Dependencies: Specific versions or availability of required third-party tools and libraries (e.g., compilers, Python versions, specific development packages like libreadline-dev vs. readline-devel) can vary.

The OS-specific folders (debian-12, rocky-8.10, etc.) within the ALS-U EPICS environment are designed according to the principle of isolating and managing these distribution-level variations. They act as adapters, providing the necessary “glue” for the standardized linux-x86_64 binaries to function correctly on each specific OS. Their purpose typically includes:

- Managing OS Dependencies: Providing manifests or scripts listing required OS packages (e.g., specific library versions, tools) to be installed via the native package manager (apt or dnf).

- Providing Wrapper Scripts: Using scripts to handle differences in paths (e.g., adjusting PATH) or setting necessary environment variables (like LD_LIBRARY_PATH, used carefully) needed for tools on a specific OS.

- Hosting Pre-compiled Dependencies (If Necessary): In some cases, they might contain specific external libraries or tools pre-compiled for that particular OS version if they cannot be easily managed otherwise.

- Symbolic Links: Pointing to the correct version or location of system libraries if needed to resolve path or version conflicts.

- Configuration Overrides: Potentially working with EPICS build system overrides (like CONFIG_SITE.* files) to define distribution-specific library paths or compiler flags needed when building applications against the core EPICS installation on that OS.

This allows the core EPICS components (Base, support modules, IOC applications), built once for the common linux-x86_64 architecture, to function correctly by resolving distribution-specific needs through these dedicated folders.
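
As an illustration of how a host can be matched to one of these folders, the sketch below derives a name such as debian-12 or rocky-8.10 from an os-release file. It is not taken from the environment's own scripts: os_folder_name is a hypothetical helper, and the assumption is that folder names follow the ID-VERSION_ID pattern suggested by the two examples in this section.

```shell
# Hypothetical helper: build an OS folder name such as debian-12 or
# rocky-8.10 from an os-release file (default: /etc/os-release).
# Assumption: folder names follow "<ID>-<VERSION_ID>".
os_folder_name() {
    release_file="${1:-/etc/os-release}"
    [ -r "$release_file" ] || return 1
    # Source in a subshell so ID and VERSION_ID do not leak into this shell.
    ( . "$release_file" && echo "${ID}-${VERSION_ID}" )
}

os_folder_name || echo "No readable os-release file on this host."
```

The result can then be compared against the folders present under the chosen environment version.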

A Combined Approach for Robustness, Adaptability, and Long-Term Simplicity

The ALS-U EPICS environment design principles culminate in a strategic combination:

- Follow Standards: Adhere to the stable, standardized linux-x86_64 architecture identifier (via EPICS_HOST_ARCH) for building core EPICS components, maximizing compatibility and following widespread Linux/EPICS conventions.

- Isolate and Manage Variation: Utilize OS-specific folders (e.g., debian-12, rocky-8.10) to effectively manage the unavoidable differences in dependencies, paths, and configurations between specific Linux distributions.

This hybrid approach results in an environment that is not only robust and adaptable but also significantly simpler for long-term maintenance. It benefits from architectural standardization while precisely accommodating the nuances of supported operating systems. Crucially, it avoids the significant inefficiencies and maintenance overhead associated with building and managing separate, nearly identical EPICS cores for each OS version.

Furthermore, this clear separation simplifies lifecycle management. This is especially critical considering the typical lifecycle mismatch: operating systems often have support lifetimes of only 5-10 years, whereas accelerator control systems must remain operational and maintainable for potentially 25 years or more. By building the core EPICS components against the stable linux-x86_64 architecture, we decouple them from specific, transient OS versions.

The OS-specific folders then act as an adaptable layer, allowing the long-lived core system to integrate with whichever operating systems are current during its extended lifespan. Adding support for a new OS version primarily involves creating its specific folder, while decommissioning support for an end-of-life OS becomes a straightforward task of removing its folder.

This approach ensures the control system’s long-term viability and simplifies OS migration paths over decades, without requiring fundamental re-engineering of the core components within EPICS base and its IOC applications overall. This focus on a standardized core with isolated adaptations is key to maintaining a manageable and sustainable EPICS environment over its required operational lifetime.

Chapter 2: First EPICS IOC and GitLab CI

Now that your environment is set up, this chapter walks you through creating your first EPICS Input/Output Controller (IOC) within the ALS-U EPICS Environment. You will also learn how to integrate it with GitLab Continuous Integration (CI) for automated testing and development workflows.

This chapter covers the following topics:

- First EPICS IOC: Building a basic EPICS IOC from scratch.

- Expand the First IOC: Adding more functionality to your initial IOC.

- Continuous Integration: Setting up GitLab CI pipelines for your IOC project.

2.1 Your First ALS-U EPICS IOC

This section provides your first hands-on experience creating an Input/Output Controller (IOC) using the standardized ALS-U EPICS environment tools. You will clone the template generator repository, use the generate_ioc_structure.bash script to create a basic IOC skeleton based on a name and location, explore the fundamental configuration files found in the configure and mouseApp directories (specifically RELEASE and Makefile), and learn the essential commands (make, ./st.cmd) to build and execute your first simple IOC instance.

Lesson Overview

In this lesson, you will learn how to:

- Generate and execute the IOC using the ALS-U IOC template generator

- Understand the purpose of key generated folders (configure, iocBoot, mouseApp).

- Understand the role of two important files (configure/RELEASE, mouseApp/src/Makefile) and their relationship.

- Understand basic EPICS IOC build and execution commands (make, st.cmd, make clean, make distclean).

Introduction to Using the Template Generator

There are many ways to build an EPICS Input/Output Controller (IOC). Within ALS-U, however, we aim for a consistent method for building our EPICS IOCs using a standardized template. This consistency makes it much easier to understand, maintain, and collaborate on IOCs developed by different team members.

Remembering all the necessary database definition (.dbd) files and dependent library links required to build even a simple IOC can be cumbersome and error-prone. To address this, we use the ALS-U EPICS template generator (found in the tools repository on the ALS GitLab server), which automates the creation of a standard IOC structure with correctly configured Makefiles.

Prerequisites

- SSH Access: Users need SSH access to the ALS GitLab repository to clone the tools repository. Contact the controls group if you need access.

- EPICS Environment: A working ALS-U EPICS environment (as set up in Chapter 1) must be available and sourced in your terminal.

- Basic Linux Skills: Familiarity with basic commands like cd, ls, mkdir, source, bash.

- Text Editor: Access to a text editor (nano, vi, emacs, etc.) for examining files.

Download the tools onto your local machine

First, ensure you have the template generator tools. Clone the tools repository from the ALS GitLab server into a suitable location (e.g., your home directory or a development workspace). You only need to do this once.

# Example: Clone into your home directory

# Example for the ALS-U Internal GitLab (Official)

$ cd ~

$ git clone ssh://git@git-local.als.lbl.gov:8022/alsu/tools.git

# or

# Example for the Mirror site on GitHub

$ git clone https://github.com/jeonghanlee/EPICS-IOC-template-tools.git tools

This will create a tools directory containing the generator script.

The generate_ioc_structure.bash Script

Located within the tools repository, this script automates the creation of the standard ALS-U IOC directory structure, significantly reducing the manual workflow potentially outlined in older development guides.

It requires two mandatory options:

- -p <APPNAME>: The Device Name or primary application name for your IOC (e.g., mouse).

- -l <LOCATION>: The location identifier for your IOC (e.g., home).

These names must be chosen according to the official IOC Name Naming Convention document to maintain consistency across ALS-U.

First example: Creating, Building, and Running the mouse IOC

Let’s create a very simple example IOC using the generator. We will use mouse as the APPNAME and home as the LOCATION. For now, we assume that the APPNAME is the same as the IOC application name. In practice, this assumption does not always hold; we will cover that case later.

- Ensure Environment is Active: Open a terminal and source the desired ALS-U EPICS environment setup script:

# Use the correct path for your setup

$ source ~/epics/1.1.1/debian-12/7.0.7/setEpicsEnv.bash

- Generate the IOC Structure: Run the script from the directory containing the tools directory (or provide the full path to the script).

# Assuming the 'tools' directory is in your current directory; otherwise provide the full path

$ bash tools/generate_ioc_structure.bash -l home -p mouse

# This will create a new directory named 'mouse'

- Navigate into the IOC Directory:

$ cd mouse

- Build the IOC: Use the make command. This invokes the EPICS build system, which reads the Makefiles, compiles necessary components (like support for standard records), links libraries, and installs the runtime files.

mouse $ make

Watch for any error messages during the build.

- Navigate to the Boot Directory: The runnable IOC instance files are placed in a specific subdirectory within iocBoot. The naming convention is typically ioc<LOCATION>-<APPNAME>.

mouse $ cd iocBoot/iochome-mouse

- Run the IOC: Execute the startup script st.cmd. This script contains commands interpreted by the EPICS IOC shell to load configurations, databases (if any), start background processes, and initialize the IOC.

# Prompt shows you are inside the boot directory

iochome-mouse $ ./st.cmd

You should see EPICS startup messages, version information, and finally the IOC shell prompt (e.g., 7.0.7 >), indicating the IOC is running. To stop it later, press Ctrl+C or type exit at the prompt.

(The tools script supports more complex scenarios, but this covers the fundamental generate-build-run cycle.)

Exploring the Generated Folders

After running the generator and make, several directories are created. Let’s examine the three most important ones for developers: configure, iocBoot, and mouseApp.

A Configuration Files Folder (configure)

This folder contains several predefined configuration files that work well within the standard EPICS building system.

In configure, RELEASE and CONFIG_SITE are the files most often opened and updated. Please open configure/CONFIG_SITE first to review its contents.

Given how the ALS-U EPICS environment is set up and how IOCs are deployed in the ALS-U production environment, we rarely need to update CONFIG_SITE.

In this folder, you will primarily care about the RELEASE file, as the other template-generated configuration files will likely not need modification.

Please open the RELEASE file. You will see the following template-generated content:

...

# Variables and paths to dependent modules:

MODULES = $(EPICS_BASE)/../modules

#MYMODULE = $(MODULES)/my-module

# If using the sequencer, point SNCSEQ at its top directory:

#ALS_IOCSH = $(MODULES)/iocsh

#ASYN = $(MODULES)/asyn

#SNCSEQ = $(MODULES)/seq

#MODBUS = $(MODULES)/modbus

#SNMP = $(MODULES)/snmp

#STD = $(MODULES)/std

#CALC = $(MODULES)/calc

#AUTOSAVE = $(MODULES)/autosave

#RECCASTER = $(MODULES)/recsync

#### STREAM = $(MODULES)/stream

# ALS-U use "StreamDevice" as a directory name

# STREAM = $(MODULES)/StreamDevice

#RETOOLS = $(MODULES)/retools

#CAPUTLOG = $(MODULES)/caPutLog

#### devIocStats : EPICS community iocStats

#devIocStats = $(MODULES)/iocStats

#### IOCSTATS : ALS specific iocStats

#IOCSTATS = $(MODULES)/iocStatsALS

#MEASCOMP = $(MODULES)/measComp

#SSCAN=$(MODULES)/sscan

#BUSY=$(MODULES)/busy

#SCALER=$(MODULES)/scaler

#MCA=$(MODULES)/mca

#ETHER_IP=$(MODULES)/ether_ip

#

### ALS-U Site Specific Modules

### VACUUM Modules

#RGAMV2=$(MODULES)/rgamv2

#UNIDRV=$(MODULES)/unidrv

#QPC=$(MODULES)/qpc

### Instrumentation

#EVENTGENERATORSUP=$(MODULES)/eventGeneratorSup

#BPMSUP=$(MODULES)/bpmSup

### FEED for LLRF

#FEED=$(MODULES)/feed

### PSC Modules

#PSCDRV=$(MODULES)/pscdrv

### MOTION Modules

#PMAC=$(MODULES)/pmac

#

### ALS-U Default Module

PVXS=$(MODULES)/pvxs

# EPICS_BASE should appear last so earlier modules can override stuff:

EPICS_BASE = /home/jeonglee/epics/1.1.1/debian-12/7.0.7/base

...

This file lists the EPICS module dependencies. To enable a module for your IOC application, remove the # symbol at the start of its line. When the template generator creates your IOC structure, the EPICS_BASE environment variable (set when you sourced the environment) takes precedence over the value in this file. Therefore, for this workflow, you typically don’t need to modify the EPICS_BASE line here. We will revisit this file with a practical exercise later in the guidebook.
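Uncommenting a module is normally done by hand in a text editor. As an illustration only, the same edit can be sketched on the command line. The helper name enable_module is hypothetical, and the demo runs on a temporary file rather than on a real configure/RELEASE.

```shell
# Hypothetical helper: uncomment one "#MODULE = ..." line in a RELEASE-style file.
enable_module() {
    local release_file="$1"
    local module="$2"
    local tmp_file
    tmp_file="$(mktemp)" || return 1
    # Remove a single leading '#' (and optional spaces) in front of "MODULE =".
    # Lines starting with '####' are left untouched.
    sed "s|^#[[:space:]]*\(${module}[[:space:]]*=\)|\1|" "${release_file}" > "${tmp_file}" || return 1
    cat "${tmp_file}" > "${release_file}"
    rm -f "${tmp_file}"
}

# Demo on a temporary file that imitates a few lines of configure/RELEASE.
demo_release="$(mktemp)"
printf '%s\n' \
    '#ASYN = $(MODULES)/asyn' \
    '#### STREAM = $(MODULES)/stream' \
    '# STREAM = $(MODULES)/StreamDevice' \
    '#CALC = $(MODULES)/calc' > "${demo_release}"

enable_module "${demo_release}" ASYN
enable_module "${demo_release}" STREAM
cat "${demo_release}"
```

In the demo, only the ASYN line and the StreamDevice variant of STREAM become active; the CALC line and the line starting with #### stay commented out.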

IOC Startup Folder (iocBoot/iochome-mouse)

This directory contains the files required to run a specific configured instance of your IOC application. The subdirectory name (iochome-mouse) combines the location (home) and application name (mouse).

- st.cmd (Startup Script): This is the main file executed to start the IOC. It’s a script containing commands for the EPICS IOC shell. Its primary responsibilities include:

  - Loading the compiled database definitions (dbLoadDatabase).

  - Registering device and record support (*_registerRecordDeviceDriver).

  - Loading specific Process Variable instances from database files (dbLoadRecords - none in this simple example).

  - Configuring hardware communication (none in this example).

  - Performing final initialization and starting IOC processing (iocInit).

  The template generator creates a basic st.cmd file that handles the essentials for starting a simple IOC. You will modify this file frequently as you add databases and hardware support.

Application Source Folder (mouseApp)

This directory and its subdirectories (Db, src) contain the source code specific to your IOC application (mouse in this case).

- mouseApp/Db/: The conventional location for your database files (.db, .proto, .template, .substitutions). (We will add files here in later lessons).

- mouseApp/src/: The conventional location for your C/C++ source code files, if you write custom device support, sequence programs, or other code.

- mouseApp/src/Makefile: This Makefile handles compiling your custom C/C++ code (if any) and linking it with EPICS Base and required module libraries. It works closely with configure/RELEASE.

Examine the mouseApp/src/Makefile. Note the ifneq blocks:

TOP=../..

include $(TOP)/configure/CONFIG

#----------------------------------------

# ADD MACRO DEFINITIONS AFTER THIS LINE

#=============================

#=============================

# Build the IOC application

PROD_IOC = mouse

# mouse.dbd will be created and installed

DBD += mouse.dbd

Common_DBDs += base.dbd

Common_DBDs += system.dbd

Common_SRCs +=

ifneq ($(ASYN),)

Common_DBDs += asyn.dbd

Common_DBDs += drvAsynIPPort.dbd

Common_DBDs += drvAsynSerialPort.dbd

Common_LIBs += asyn

endif

ifneq ($(MODBUS),)

Common_DBDs += modbusSupport.dbd

Common_LIBs += modbus

endif

ifneq ($(SNMP),)

Common_DBDs += devSnmp.dbd

Common_LIBs += devSnmp

SYS_PROD_LIBS += netsnmp

endif

...

- Automatic Dependencies: Similar to the overview step, these ifneq ($(MODULE),) blocks automatically include the necessary database definition files (.dbd) and link the required module libraries only if you have uncommented the corresponding MODULE variable in configure/RELEASE. This significantly simplifies managing build dependencies. For this first basic IOC, you don’t need to modify this file because you haven’t added any dependencies in RELEASE or any custom C code in Common_SRCs.

A Few Useful Build Commands

Here are essential make commands used for building and cleaning EPICS IOCs, typically run from the top-level IOC directory (e.g., mouse):

- make: (Default target) Compiles changed source files, processes database definitions, links libraries, and installs the executable and runtime files into bin/, lib/, dbd/, db/. This is the command you use most often to build or update your IOC.

- make clean: Removes intermediate files generated during the build (like .o object files, dependency files). It generally leaves the final installed files in bin/, lib/, db/, dbd/. Use this when you want to force recompilation of source files.

- make distclean: Performs a much more thorough cleanup. It removes almost everything created by make and make clean, including the bin, lib, dbd directories and installed files in db. It aims to return the directory tree closer to its state immediately after generation or cloning. Use distclean if you suspect build problems caused by leftover files or want a completely fresh build from scratch.

You can observe the effects of these commands by examining the contents of your IOC folder (e.g., using ls -lR or tree) before and after running them.
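One way to make that before/after comparison concrete is to record a sorted file listing and compare the two listings with diff. The sketch below uses a hypothetical helper, snapshot_tree, and a temporary directory in which a placeholder file stands in for a build product, so it does not require an EPICS installation.

```shell
# Hypothetical helper: print a sorted list of all files below a directory.
snapshot_tree() {
    (cd "$1" && find . -type f | sort)
}

# Demo in a temporary directory; 'built.o' stands in for a file created by make.
demo_dir="$(mktemp -d)"
touch "${demo_dir}/Makefile"
before="$(snapshot_tree "${demo_dir}")"
touch "${demo_dir}/built.o"
after="$(snapshot_tree "${demo_dir}")"

# Store both listings outside the tree and compare them.
before_file="$(mktemp)"
after_file="$(mktemp)"
printf '%s\n' "${before}" > "${before_file}"
printf '%s\n' "${after}" > "${after_file}"
diff "${before_file}" "${after_file}" || true   # diff exits with 1 when the listings differ
```

In a real IOC directory, you would take one snapshot, run make, make clean, or make distclean, and then take the second snapshot.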

2.2 Expanding Your First IOC: Adding Another IOC

Building upon your first IOC, this section demonstrates how the ALS-U environment facilitates managing multiple, related IOC instances from a single, centralized codebase repository (identified by a unique APPNAME). You will learn to use different options (-l LOCATION, -d device name, -f FOLDER) of the generate_ioc_structure.bash tool to add new IOC configurations (creating new subdirectories within iocBoot) while reusing the core application code found in <APPNAME>App. The script’s validation logic (like enforcing case-sensitivity for APPNAME) and the benefits of this shared codebase approach for maintenance and collaboration are highlighted through practical examples.

Lesson Overview

In this lesson, you will learn how to:

- Add a new IOC application with a different LOCATION to the existing APPNAME (device name).

- Add a new IOC application with a different “application name” (a unique identifier for this new IOC instance) and LOCATION, while still using the same APPNAME (device name).

- Add a new IOC application with a different “application name”, LOCATION, and a git clone folder name (repository name), while still using the same APPNAME (device name).

Case 1: Your IOC Application name matches the IOC repository APPNAME

This is the most common and preferred case. We start with the step of cloning tools and mouse from scratch.

# Example for the ALS-U Internal Gitlab (Official)

# You can use the mirror site instead.

$ git clone ssh://git@git-local.als.lbl.gov:8022/alsu/tools.git

$ git clone ssh://git@git-local.als.lbl.gov:8022/alsu/sandbox/jeonglee-mouse.git mouse # note that we have to use `mouse` folder name here, as this will be the `APPNAME` used in the subsequent `generate_ioc_structure.bash` command.

Now we would like to create an IOC with mouse as the APPNAME and park as LOCATION with the same git folder or repository name mouse.

$ bash tools/generate_ioc_structure.bash -l park -p mouse

Your Location ---park--- was NOT defined in the predefined ALS/ALS-U locations

----> gtl ln ltb inj br bts lnrf brrf srrf arrf bl acc als cr ar01 ar02 ar03 ar04 ar05 ar06 ar07 ar08 ar09 ar10 ar11 ar12 sr01 sr02 sr03 sr04 sr05 sr06 sr07 sr08 sr09 sr10 sr11 sr12 bl01 bl02 bl03 bl04 bl05 bl06 bl07 bl08 bl09 bl10 bl11 bl12 fe01 fe02 fe03 fe04 fe05 fe06 fe07 fe08 fe09 fe10 fe11 fe12 alsu bta ats sta lab testlab

>>

>>

>> Do you want to continue (Y/n)? Y

>> We are moving forward .

>> We are now creating a folder with >>> mouse <<<

>> If the folder is exist, we can go into mouse

>> in the >>> /home/jeonglee/AAATemps/sandbox <<<

>> Entering into /home/jeonglee/AAATemps/sandbox/mouse

>> makeBaseApp.pl -t ioc

mouse exists, not modified.

>>> Making IOC application with IOCNAME park-mouse and IOC iocpark-mouse

>>>

>> makeBaseApp.pl -i -t ioc -p mouse

>> makeBaseApp.pl -i -t ioc -p park-mouse

Using target architecture linux-x86_64 (only one available)

>>>

>>> IOCNAME : park-mouse

>>> IOC : iocpark-mouse

>>> iocBoot IOC path /home/jeonglee/AAATemps/sandbox/mouse/iocBoot/iocpark-mouse

Exist : .gitlab-ci.yml

Exist : .gitignore

Exist : .gitattributes

>> leaving from /home/jeonglee/AAATemps/sandbox/mouse

>> We are in /home/jeonglee/AAATemps/sandbox

Enter the mouse folder and run the tree command:

$ cd mouse/

mouse $ tree --charset=ascii -L 2

.

|-- configure

| |-- CONFIG

| |-- CONFIG_IOCSH

| |-- CONFIG_SITE

| |-- Makefile

| |-- RELEASE

| |-- RULES

| |-- RULES_ALSU

| |-- RULES_DIRS

| |-- RULES.ioc

| `-- RULES_TOP

|-- docs

| |-- README_autosave.md

| `-- SoftwareRequirementsSpecification.md

|-- iocBoot

| |-- iochome-mouse

| |-- iocpark-mouse

| `-- Makefile

|-- Makefile

|-- mouseApp

| |-- Db

| |-- iocsh

| |-- Makefile

| `-- src

`-- README.md

10 directories, 16 files

Now, you can see there are two folders, iochome-mouse and iocpark-mouse, in the iocBoot folder. These two folders represent your different IOC applications based on the same mouse EPICS IOC code repository.

Case 2: Your IOC Application name does not match the IOC APPNAME

This happens frequently when you work with an existing IOC application. We start with the step of cloning tools and mouse from scratch.

# Example for the ALS-U Internal Gitlab (Official)

# You can use the mirror site instead.

$ git clone ssh://git@git-local.als.lbl.gov:8022/alsu/tools.git

$ git clone ssh://git@git-local.als.lbl.gov:8022/alsu/sandbox/jeonglee-mouse.git mouse # note that we have to use `mouse` folder name here, as this corresponds to the `APPNAME` we will use in the next step, even though the IOC application name will be different.

Now we would like to create an IOC with woodmouse as the IOC application name (using the -d option) and park as LOCATION, within the same Git repository mouse, whose APPNAME is mouse.

$ bash tools/generate_ioc_structure.bash -l park -p mouse -d woodmouse

Your Location ---park--- was NOT defined in the predefined ALS/ALS-U locations

----> gtl ln ltb inj br bts lnrf brrf srrf arrf bl acc als cr ar01 ar02 ar03 ar04 ar05 ar06 ar07 ar08 ar09 ar10 ar11 ar12 sr01 sr02 sr03 sr04 sr05 sr06 sr07 sr08 sr09 sr10 sr11 sr12 bl01 bl02 bl03 bl04 bl05 bl06 bl07 bl08 bl09 bl10 bl11 bl12 fe01 fe02 fe03 fe04 fe05 fe06 fe07 fe08 fe09 fe10 fe11 fe12 alsu bta ats sta lab testlab

>>

>>

>> Do you want to continue (Y/n)?

>> We are moving forward .

>> We are now creating a folder with >>> mouse <<<

>> If the folder is exist, we can go into mouse

>> in the >>> /home/jeonglee/AAATemps/sandbox <<<

>> Entering into /home/jeonglee/AAATemps/sandbox/mouse

>> makeBaseApp.pl -t ioc

mouse exists, not modified.

>>> Making IOC application with IOCNAME park-woodmouse and IOC iocpark-woodmouse

>>>

>> makeBaseApp.pl -i -t ioc -p mouse

>> makeBaseApp.pl -i -t ioc -p park-woodmouse

Using target architecture linux-x86_64 (only one available)

>>>

>>> IOCNAME : park-woodmouse

>>> IOC : iocpark-woodmouse

>>> iocBoot IOC path /home/jeonglee/AAATemps/sandbox/mouse/iocBoot/iocpark-woodmouse

Exist : .gitlab-ci.yml

Exist : .gitignore

Exist : .gitattributes

>> leaving from /home/jeonglee/AAATemps/sandbox/mouse

>> We are in /home/jeonglee/AAATemps/sandbox

You can see the iocBoot/iocpark-woodmouse folder, and we also have the same mouseApp folder.

$ tree --charset=ascii -L 2 mouse/

mouse/

|-- configure

| |-- CONFIG

| |-- CONFIG_IOCSH

| |-- CONFIG_SITE

| |-- Makefile

| |-- RELEASE

| |-- RULES

| |-- RULES_ALSU

| |-- RULES_DIRS

| |-- RULES.ioc

| `-- RULES_TOP

|-- docs

| |-- README_autosave.md

| `-- SoftwareRequirementsSpecification.md

|-- iocBoot

| |-- iochome-mouse

| |-- iocpark-mouse

| |-- iocpark-woodmouse

| `-- Makefile

|-- Makefile

|-- mouseApp

| |-- Db

| |-- iocsh

| |-- Makefile

| `-- src

`-- README.md

11 directories, 16 files

Now compare the folders iochome-mouse, iocpark-mouse, and iocpark-woodmouse to see how they differ. You can do this with a generic Linux command-line tool, such as diff.

iocBoot $ diff iochome-mouse/st.cmd iocpark-mouse/st.cmd

35,36c35,36

< epicsEnvSet("IOCNAME", "home-mouse")

< epicsEnvSet("IOC", "iochome-mouse")

---

> epicsEnvSet("IOCNAME", "park-mouse")

> epicsEnvSet("IOC", "iocpark-mouse")

64c64

< #--asSetFilename("$(DB_TOP)/access_securityhome-mouse.acf")

---

> #--asSetFilename("$(DB_TOP)/access_securitypark-mouse.acf")

iocBoot $ diff iochome-mouse/st.cmd iocpark-woodmouse/st.cmd

35,36c35,36

< epicsEnvSet("IOCNAME", "home-mouse")

< epicsEnvSet("IOC", "iochome-mouse")

---

> epicsEnvSet("IOCNAME", "park-woodmouse")

> epicsEnvSet("IOC", "iocpark-woodmouse")

64c64

< #--asSetFilename("$(DB_TOP)/access_securityhome-mouse.acf")

---

> #--asSetFilename("$(DB_TOP)/access_securitypark-woodmouse.acf")

Historically, the variables IOC and IOCNAME have been a source of confusion. Therefore, we want to define them clearly from the outset, as these variables are used extensively to identify your IOC in the production environment.
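The diff output above suggests a simple rule: IOCNAME is LOCATION followed by a hyphen and either the device name given with -d or, if none is given, the APPNAME; IOC is IOCNAME with the prefix ioc. The sketch below reproduces that rule. The function name ioc_names is hypothetical and the code is not taken from the generator script.

```shell
# Hypothetical helper: derive IOCNAME and IOC from LOCATION, APPNAME, and an optional DEVICE.
ioc_names() {
    local location="$1"
    local appname="$2"
    # Fall back to APPNAME when no device name is given.
    local device="${3:-${appname}}"
    local iocname="${location}-${device}"
    printf 'IOCNAME=%s\nIOC=ioc%s\n' "${iocname}" "${iocname}"
}

ioc_names home mouse            # IOCNAME=home-mouse, IOC=iochome-mouse
ioc_names park mouse woodmouse  # IOCNAME=park-woodmouse, IOC=iocpark-woodmouse
```

The IOC value is also the name of the corresponding subdirectory under iocBoot.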

Case 3: Your clone folder name does not match the IOC APPNAME (directory)

In practice, developers may encounter situations where the name of the cloned Git repository folder differs from the IOC’s APPNAME (device name). The recommended practice within the ALS-U EPICS environment is to ensure that the Git repository name matches the primary APPNAME (device name) of the IOC it contains, especially at the beginning of the development workflow. However, we also need to accommodate existing IOC applications and provide developers with a more flexible solution for their Git workflow (clone, branch, or fork).

# Example for the ALS-U Internal Gitlab (Official)

# You can use the mirror site instead.

$ git clone ssh://git@git-local.als.lbl.gov:8022/alsu/tools.git

$ git clone ssh://git@git-local.als.lbl.gov:8022/alsu/sandbox/jeonglee-mouse.git

Here we use bts, which is case-sensitive and defined in our predefined location list, as LOCATION.

$ bash tools/generate_ioc_structure.bash -l bts -p mouse -f jeonglee-mouse

The following ALS / ALS-U locations are defined.

----> gtl ln ltb inj br bts lnrf brrf srrf arrf bl acc als cr ar01 ar02 ar03 ar04 ar05 ar06 ar07 ar08 ar09 ar10 ar11 ar12 sr01 sr02 sr03 sr04 sr05 sr06 sr07 sr08 sr09 sr10 sr11 sr12 bl01 bl02 bl03 bl04 bl05 bl06 bl07 bl08 bl09 bl10 bl11 bl12 fe01 fe02 fe03 fe04 fe05 fe06 fe07 fe08 fe09 fe10 fe11 fe12 alsu bta ats sta lab testlab

Your Location ---bts--- was defined within the predefined list.

>> We are now creating a folder with >>> jeonglee-mouse <<<

>> If the folder is exist, we can go into jeonglee-mouse

>> in the >>> /home/jeonglee/AAATemps/sandbox <<<

>> Entering into /home/jeonglee/AAATemps/sandbox/jeonglee-mouse

>> makeBaseApp.pl -t ioc

mouse exists, not modified.

>>> Making IOC application with IOCNAME bts-mouse and IOC iocbts-mouse

>>>

>> makeBaseApp.pl -i -t ioc -p mouse

>> makeBaseApp.pl -i -t ioc -p bts-mouse

Using target architecture linux-x86_64 (only one available)

>>>

>>> IOCNAME : bts-mouse

>>> IOC : iocbts-mouse

>>> iocBoot IOC path /home/jeonglee/AAATemps/sandbox/jeonglee-mouse/iocBoot/iocbts-mouse

Exist : .gitlab-ci.yml

Exist : .gitignore

Exist : .gitattributes

>> leaving from /home/jeonglee/AAATemps/sandbox/jeonglee-mouse

>> We are in /home/jeonglee/AAATemps/sandbox

$ tree --charset=ascii -L 2 jeonglee-mouse/

jeonglee-mouse/

|-- configure

| |-- CONFIG

| |-- CONFIG_IOCSH

| |-- CONFIG_SITE

| |-- Makefile

| |-- RELEASE

| |-- RULES

| |-- RULES_ALSU

| |-- RULES_DIRS

| |-- RULES.ioc

| `-- RULES_TOP

|-- docs

| |-- README_autosave.md

| `-- SoftwareRequirementsSpecification.md

|-- iocBoot

| |-- iocbts-mouse

| |-- iochome-mouse

| `-- Makefile

|-- Makefile

|-- mouseApp

| |-- Db

| |-- iocsh

| |-- Makefile

| `-- src

`-- README.md

10 directories, 16 files

The -f option allows us to specify the existing top-level folder (jeonglee-mouse in this case) where the IOC application structure should be created. This is useful when the cloned repository name does not match the desired APPNAME for the IOC.

Case 4: Your clone folder name does not match the IOC APPNAME (directory) and you use the wrong application name

# Example for the ALS-U Internal Gitlab (Official)

# You can use the mirror site instead.

$ git clone ssh://git@git-local.als.lbl.gov:8022/alsu/tools.git

$ git clone ssh://git@git-local.als.lbl.gov:8022/alsu/sandbox/jeonglee-mouse.git

Here we use ar05, which is case-sensitive and defined in our predefined location list, as LOCATION. However, we use the wrong application name mOuse with incorrect casing.

$ tools/generate_ioc_structure.bash -l ar05 -p mOuse -f jeonglee-mouse

The following ALS / ALS-U locations are defined.

----> gtl ln ltb inj br bts lnrf brrf srrf arrf bl acc als cr ar01 ar02 ar03 ar04 ar05 ar06 ar07 ar08 ar09 ar10 ar11 ar12 sr01 sr02 sr03 sr04 sr05 sr06 sr07 sr08 sr09 sr10 sr11 sr12 bl01 bl02 bl03 bl04 bl05 bl06 bl07 bl08 bl09 bl10 bl11 bl12 fe01 fe02 fe03 fe04 fe05 fe06 fe07 fe08 fe09 fe10 fe11 fe12 alsu bta ats sta lab testlab

Your Location ---ar05--- was defined within the predefined list.

>> We are now creating a folder with >>> jeonglee-mouse <<<

>> If the folder is exist, we can go into jeonglee-mouse

>> in the >>> /home/jeonglee/AAATemps/sandbox <<<

>> Entering into /home/jeonglee/AAATemps/sandbox/jeonglee-mouse

>> We detected the APPNAME is the different lower-and uppercases APPNAME.

>> APPNAME : mOuse should use the same as the existing one : mouse.

>> Please use the CASE-SENSITIVITY APPNAME to match the existing APPNAME

Usage : tools/generate_ioc_structure.bash [-l LOCATION] [-d DEVICE] [-p APPNAME] [-f FOLDER] <-a>

-l : LOCATION - Standard ALS IOC location name with a strict list. Beware if you ignore the standard list!

-p : APPNAME - Case-Sensitivity

-d : DEVICE - Optional device name for the IOC. If specified, IOCNAME=LOCATION-DEVICE. Otherwise, IOCNAME=LOCATION-APPNAME

-f : FOLDER - repository, If not defined, APPNAME will be used

bash tools/generate_ioc_structure.bash -p APPNAME -l Location -d Device

bash tools/generate_ioc_structure.bash -p APPNAME -l Location -d Device -f Folder

In this case, the template generator will provide an explanation and will not proceed with the creation of a new IOC application. This is to enforce consistency in the APPNAME casing within the repository, aligning with the principle of keeping similar IOC codes together for better maintenance and collaboration.
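A casing check of this kind can be sketched as follows. This is a hypothetical illustration and not the generator's actual logic: it assumes the existing APPNAME can be read from the name of the <APPNAME>App directory, and the function names to_lower and check_appname_case are invented for the example.

```shell
# Hypothetical helper: lower-case a string.
to_lower() {
    printf '%s' "$1" | tr '[:upper:]' '[:lower:]'
}

# Hypothetical helper: fail if REQUESTED differs from an existing <APPNAME>App
# directory under TOP only by upper/lower case.
check_appname_case() {
    local top="$1"
    local requested="$2"
    local dir existing
    for dir in "${top}"/*App; do
        [ -d "${dir}" ] || continue
        existing="$(basename "${dir}")"
        existing="${existing%App}"
        if [ "${existing}" != "${requested}" ] &&
           [ "$(to_lower "${existing}")" = "$(to_lower "${requested}")" ]; then
            echo "APPNAME ${requested} should match the existing one: ${existing}" >&2
            return 1
        fi
    done
    return 0
}

# Demo with a temporary folder that imitates a cloned repository.
demo_top="$(mktemp -d)"
mkdir "${demo_top}/mouseApp"
check_appname_case "${demo_top}" mouse && echo "mouse: accepted"
check_appname_case "${demo_top}" mOuse || echo "mOuse: rejected"
```

Rejecting the request early avoids ending up with both mouseApp and mOuseApp in the same repository.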

Assignments

- Compile and Run Your IOC Applications: Navigate into the top-level directory of your IOC repository (e.g., mouse or jeonglee-mouse). For each of the IOC applications you created (e.g., iocpark-mouse, iocpark-woodmouse, iocbts-mouse), compile the code using the make command in the top-level directory. Then, navigate into the respective iocBoot subdirectory (e.g., iocBoot/iocpark-mouse) and run the IOC using the ./st.cmd command.

- Push Your Local Changes: Ensure you have added all your changes using git add . and committed them with a descriptive message using git commit -m "Your commit message". Then, push your local changes to your sandbox repository on GitLab.

2.3 GitLab Continuous Integration for IOC Development

This section details the Continuous Integration (CI) process implemented within the ALS-U GitLab environment, designed to standardize the building and testing of EPICS IOCs. You will learn how IOC projects include configuration from a central CI project (alsu/ci), understand the standard pipeline stages (like build and test), and crucially, discover how to conditionally incorporate necessary ALS-U site-specific modules into your CI builds using a simple .sitemodules trigger file. A hands-on walkthrough demonstrates pushing your IOC code to GitLab and observing the CI pipeline behavior both before and after enabling site module support.

Lesson Overview

In this documentation, you will learn how to do the following:

- Understand how ALS-U GitLab CI configuration is applied to your IOC project.

- Conditionally add site-specific modules to CI builds using the .sitemodules file.

- Create a GitLab repository for an IOC and observe the CI pipeline execution.

Key Features

- Centralized CI Configuration: Includes and extends the CI from alsu/ci for consistent ALS-U IOC development.

- Modular Design: Allows customization based on specific IOC needs.

- Multi-OS Support: Specific configurations for Debian 12, Rocky Linux 8, and Rocky Linux 9 for EPICS.

- Defined Stages: CI process includes build and test stages.

Quick Start: Integrating CI into Your IOC Project

The ALS-U IOC template generator (tools/generate_ioc_structure.bash) automatically creates a .gitlab-ci.yml file in your IOC’s root directory. This file enables CI integration by referencing configurations from the central alsu/ci project. You typically do not need to create or manually edit the file; however, understanding its components is helpful.

include:
  # Reference files from the 'alsu/ci' project, using the 'master' branch
  # A specific tag/commit could be used instead of 'master' for long-term stability
  - project: alsu/ci
    ref: master
    file:
      # Core workflow rules and variables
      - 'workflow.yml'
      - 'alsu-vars.yml'
      # Defines jobs related to site module handling
      - 'env-sitemodules.yml'
      # Defines EPICS build/test jobs for different OS targets
      - 'debian12-epics.yml'
      - 'rocky8-epics.yml'
      - 'rocky9-epics.yml'
      # --- Optional references (uncomment if needed) ---
      # - 'debian12-analyzers.yml'
      # - 'rocky8-analyzers.yml'
      # - 'rocky9-analyzers.yml'

stages:
  - build
  - test
  # - analyzers # Uncomment if analyzer stage jobs are referenced above
  # - deploy # Uncomment if deploy stage jobs are referenced (if implemented in alsu/ci)

Understanding the Included Files

The include: section references several YAML files from the central alsu/ci project. Here’s a brief overview of their purpose:

- workflow.yml: Defines the basic rules for the overall CI workflow.

- alsu-vars.yml: Contains common variables (like default EPICS versions, paths), configurations, and default settings used across various ALS-U CI jobs.

- env-sitemodules.yml: Handles environment setup and site module inclusion via .sitemodules.

- debian12-epics.yml, rocky8-epics.yml, rocky9-epics.yml: Define build/test jobs for EPICS IOCs on the respective OS.

- debian12-analyzers.yml, rocky8-analyzers.yml, rocky9-analyzers.yml: (Commented out by default) Provide template jobs for running static code analysis; users must implement the actual tool invocation commands within their project’s .gitlab-ci.yml if they uncomment these references.

Conditional Inclusion of Site Modules using .sitemodules

The standard GitLab runners (Docker images with an OS and the default ALS-U EPICS environment) do not contain pre-built ALS-U site-specific EPICS modules, such as:

- Vacuum dev-mks-mv2

- Vacuum dev-mks937b-unidrv

- Vacuum dev-gamma-pctrl

- Instrumentation dev-evg-support

- Instrumentation dev-bpm-support

- RF dev-feed-support

If your IOC requires one or more of these site-specific modules, you need to signal this to the CI pipeline. This is done by creating a file named .sitemodules in the top-level directory of your IOC repository.

The CI pipeline (specifically jobs defined in env-sitemodules.yml) detects the presence of this file. If .sitemodules exists, the CI will automatically add a predefined set of common site modules to the build environment (by cloning them) before compiling your IOC. Based on the example walkthrough below, simply creating a .sitemodules file seems sufficient to trigger this. (Verify this mechanism if your requirements differ or if specific modules need to be listed within the file in some cases).
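Conceptually, the trigger is just a test for the presence of a file. The sketch below illustrates that idea with a hypothetical function, needs_site_modules; it is not the code used in env-sitemodules.yml, whose implementation is not shown in this guide.

```shell
# Hypothetical helper: succeed if the IOC top-level directory contains a .sitemodules file.
needs_site_modules() {
    [ -f "${1:-.}/.sitemodules" ]
}

# Demo with a temporary directory standing in for the IOC repository.
demo_ioc="$(mktemp -d)"

if needs_site_modules "${demo_ioc}"; then
    echo "site modules requested"
else
    echo "default environment only"
fi

touch "${demo_ioc}/.sitemodules"

if needs_site_modules "${demo_ioc}"; then
    echo "site modules requested"
else
    echo "default environment only"
fi
```

In this sketch, only the existence of the file matters, which is consistent with the walkthrough later in this section.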

CI Stages Explained

The stages section in your .gitlab-ci.yml defines the different phases of your CI pipeline. The current configuration includes:

- build: Compiles your IOC application code against the target EPICS environment (potentially augmented with site modules if .sitemodules is present).

- test: Intended for running automated tests. Currently, the default jobs referenced might only execute basic checks or serve as placeholders for user-defined tests.

The following stages are often available via the central CI project but commented out by default in the template:

- analyzers: Reserved for jobs that perform static code analysis. Users need to configure the specific tools and commands if they reference the corresponding analyzer files.

- deploy: Could contain jobs for deploying build artifacts, documentation, or tagging releases.

Let’s do this! (Hands-On Example)

This walkthrough shows how to push the mouse IOC (created in a previous lesson) to a new GitLab repository and observe the CI pipeline.

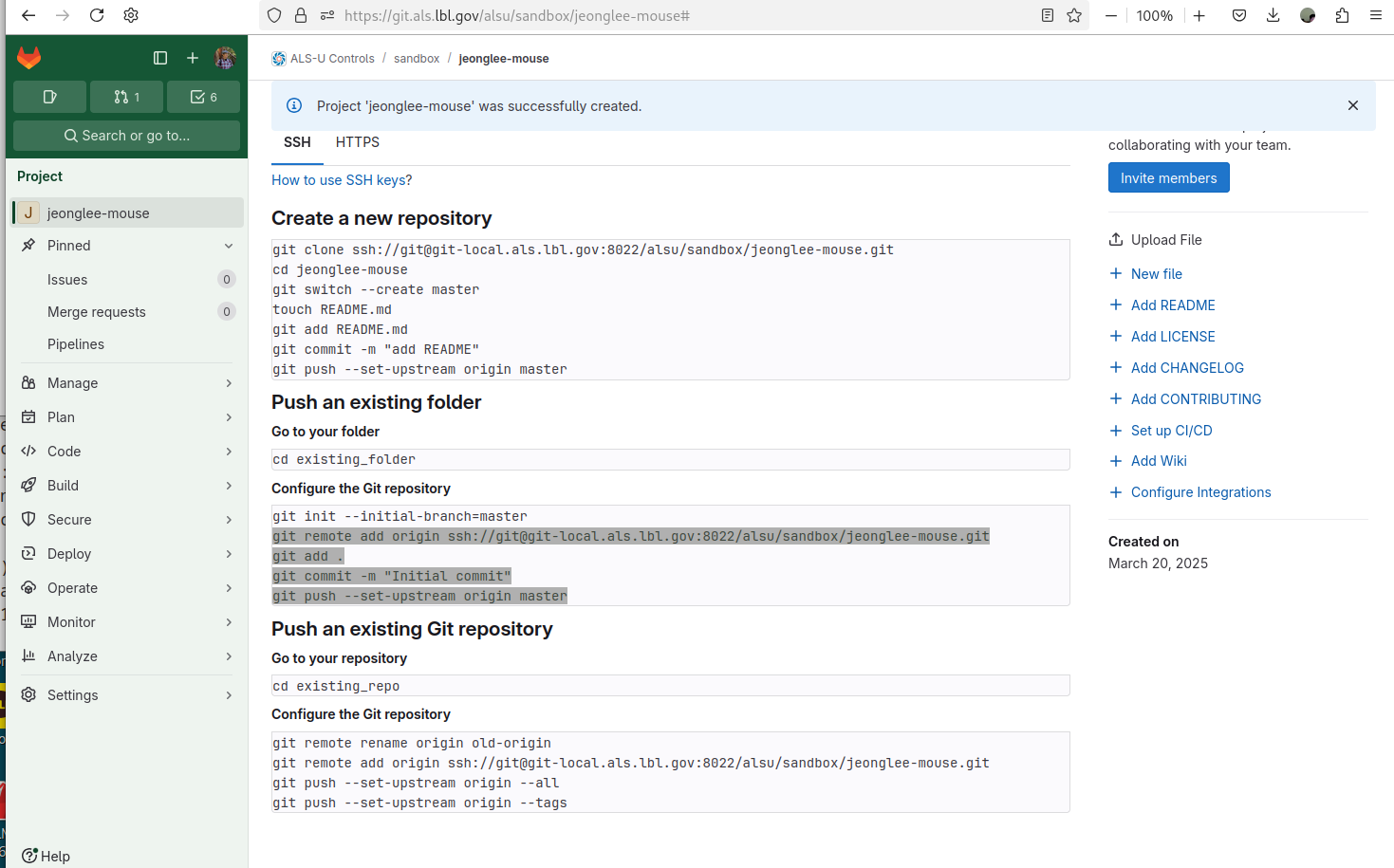

Create Your First Repository on GitLab

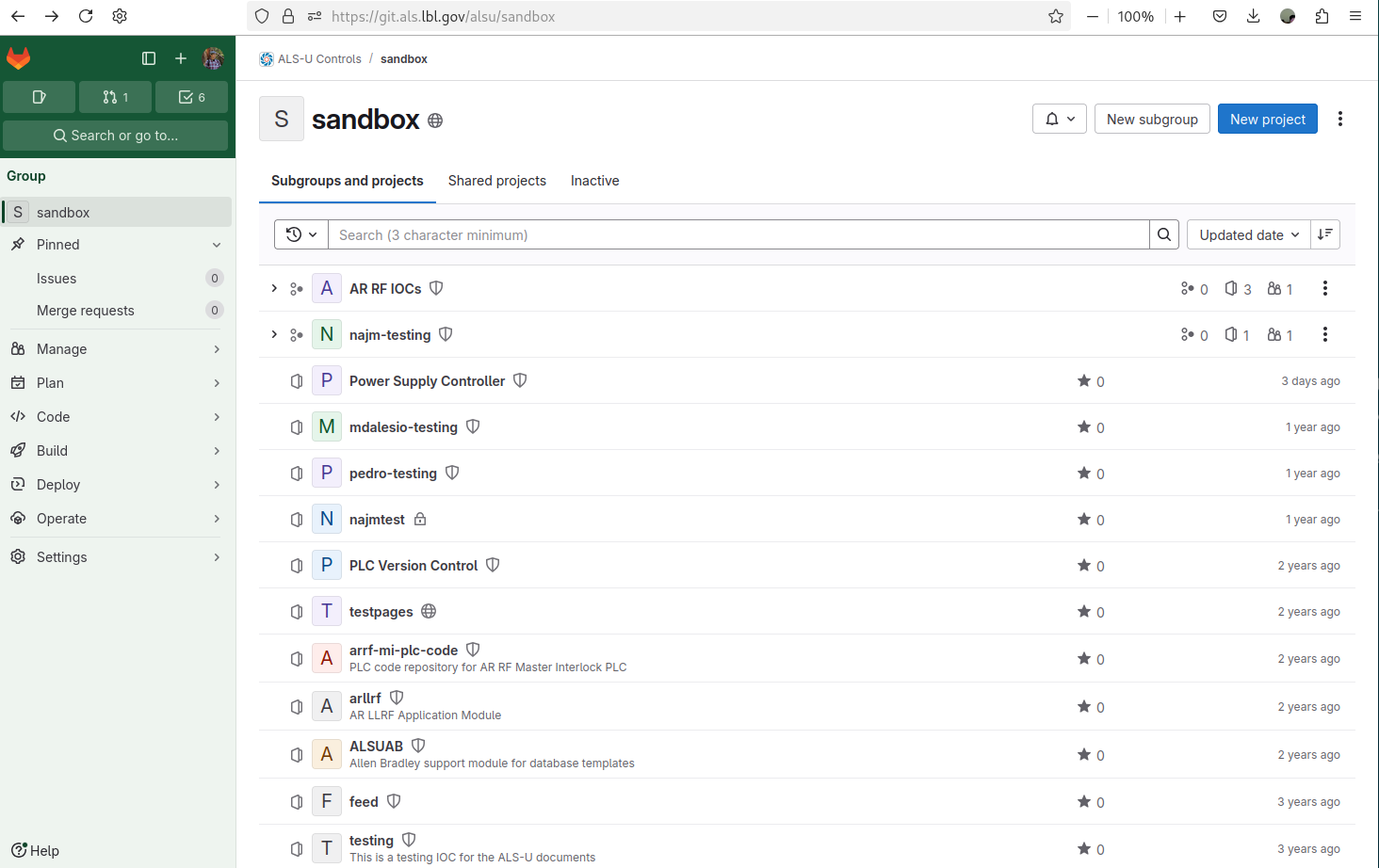

- Go to the ALS-U GitLab instance and navigate to a suitable group, like your personal sandbox area or a project group (e.g., alsu/sandbox). Click New project.

|

|---|

| Figure 1 ALS-U GitLab Sandbox |

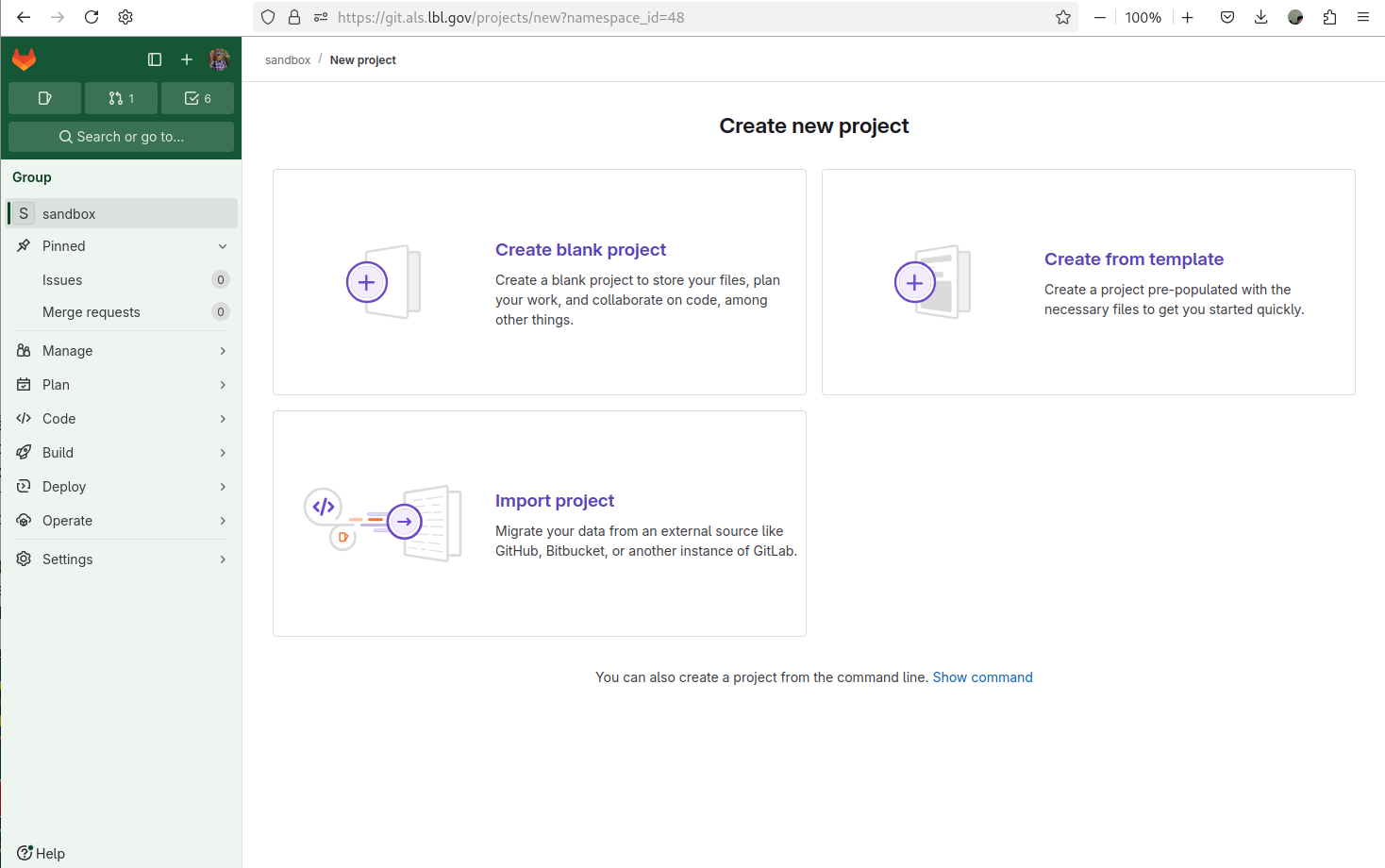

- Select Create blank project.

|

|---|

| Figure 2 ALS-U GitLab Sandbox - Create new project |

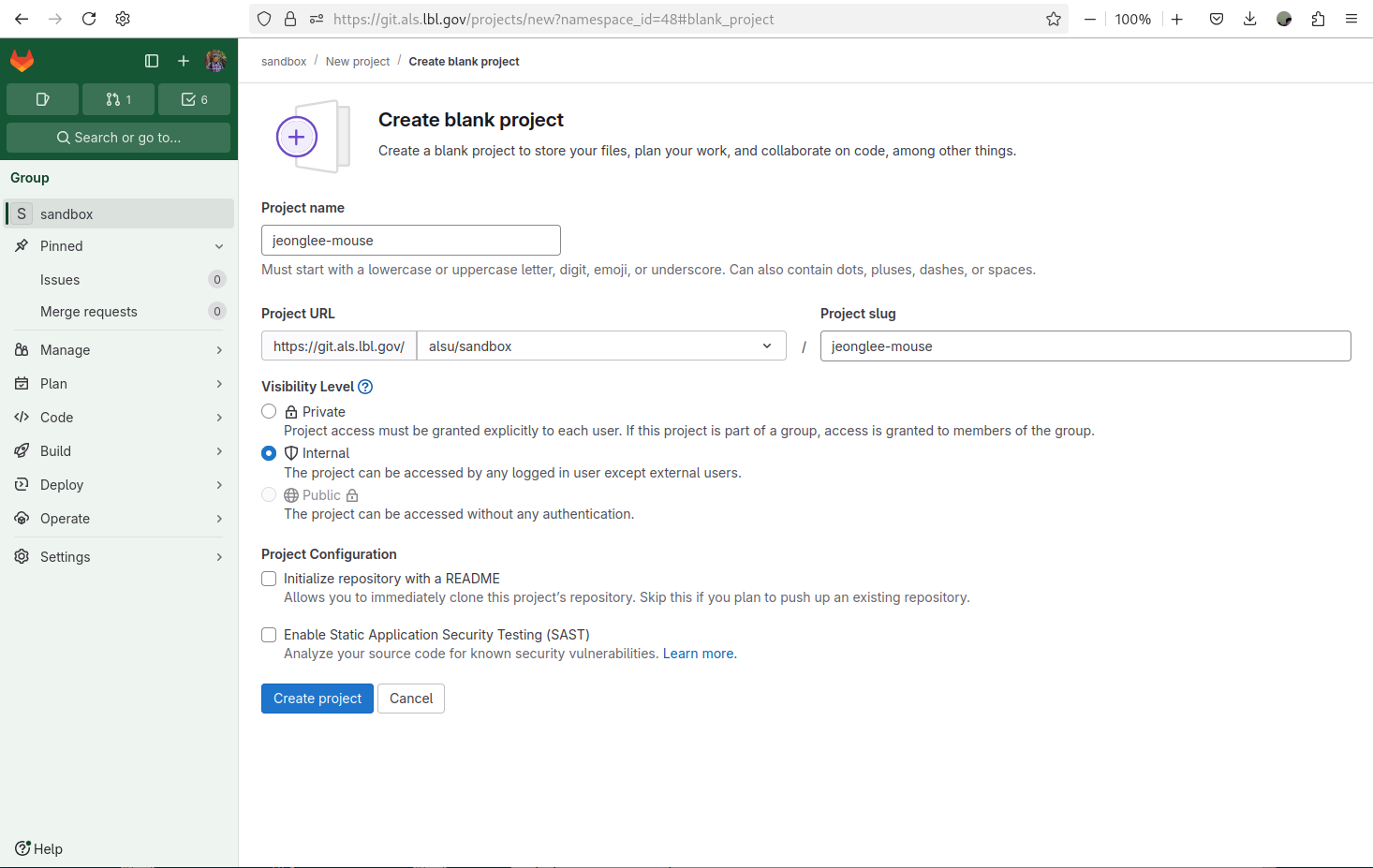

- Fill in the following fields, then click Create project:

  - Project name: your user account + mouse, for example, jeonglee-mouse

  - Visibility Level: Select Internal

  - Project Configuration: Uncheck Initialize repository with a README

|

|---|

| Figure 3 ALS-U GitLab Sandbox - Create blank project with default selections |

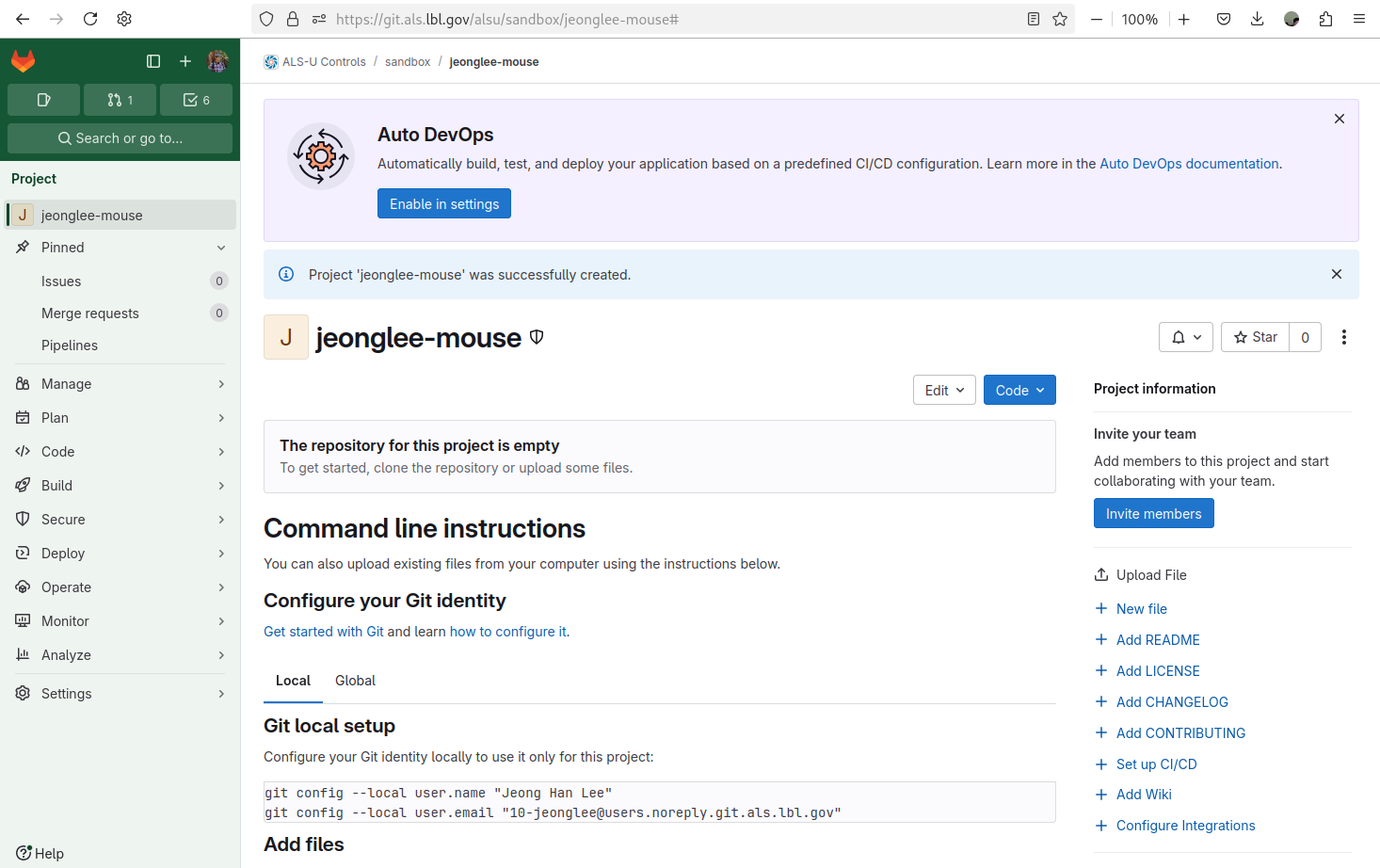

|

|---|

| Figure 4 ALS-U GitLab Sandbox - Your gitlab repo jeonglee-mouse |

Push mouse to your repository

Now you are ready to push your code into the Git repository you just created, for example, jeonglee-mouse.

Go to your IOC folder, mouse, and run the following commands:

|

|---|

| Figure 5 ALS-U GitLab Sandbox - Pushing the existing folder to your gitlab repo jeonglee-mouse |

mouse $ git remote add origin ssh://git@git-local.als.lbl.gov:8022/alsu/sandbox/jeonglee-mouse.git

mouse $ git add .

mouse $ git commit -m "Initial commit"

mouse $ git push --set-upstream origin master

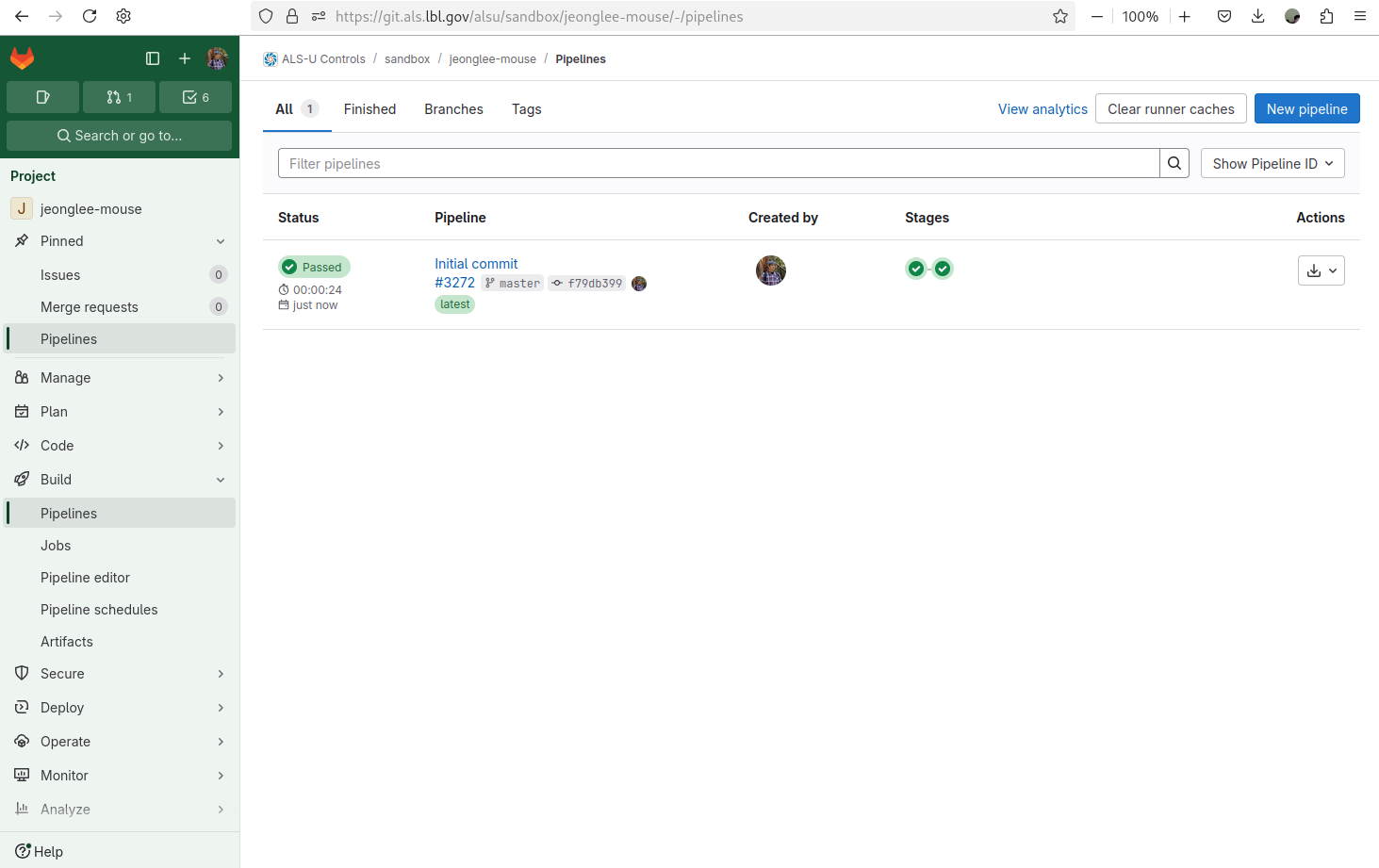

Check the CI Process

- Go to the GitLab web site and select Pipelines.

|

|---|

| Figure 6 ALS-U GitLab Sandbox - CI Pipelines |

- Select

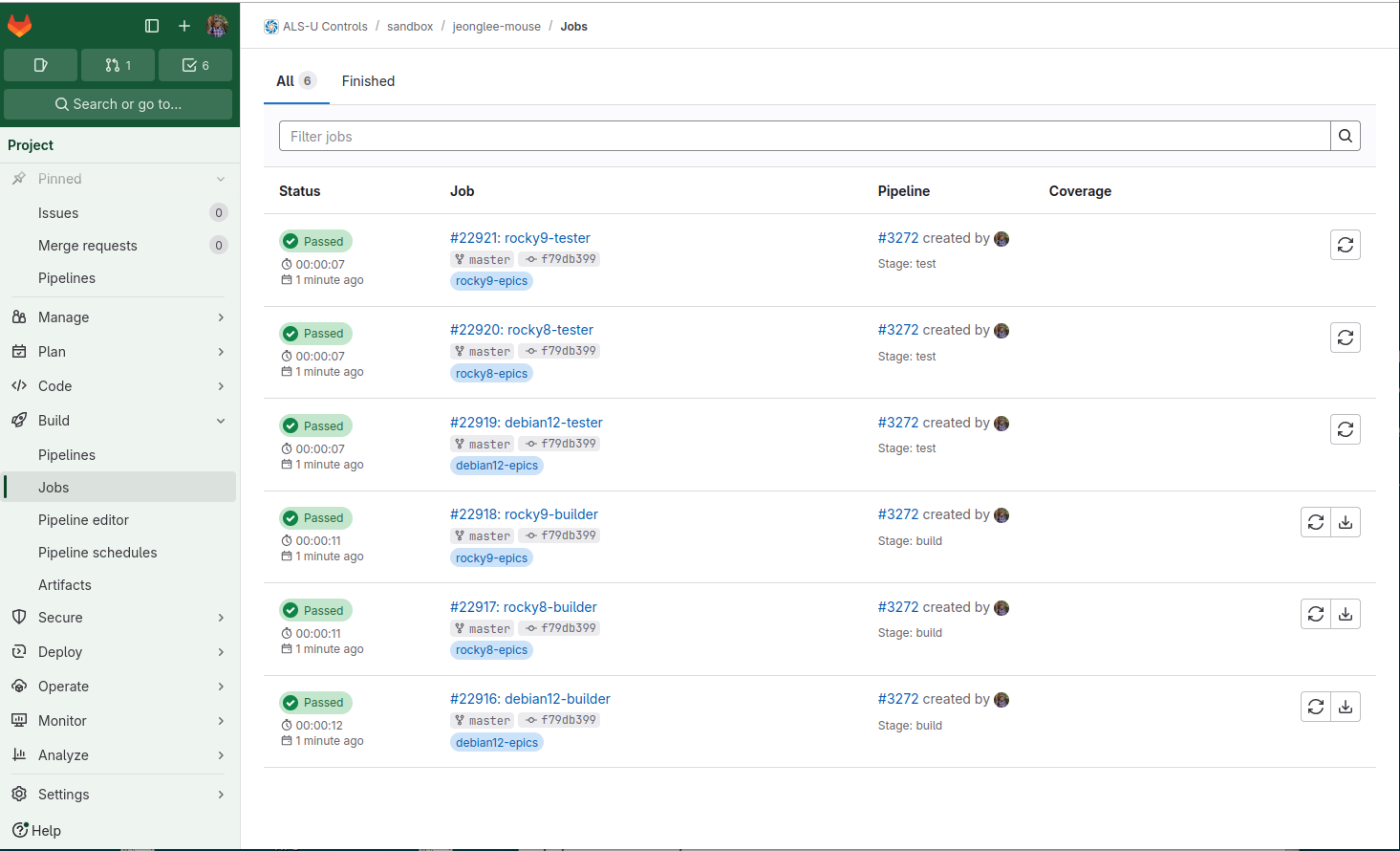

Jobs

|

|---|

| Figure 7 ALS-U GitLab Sandbox - CI Jobs |

- Select

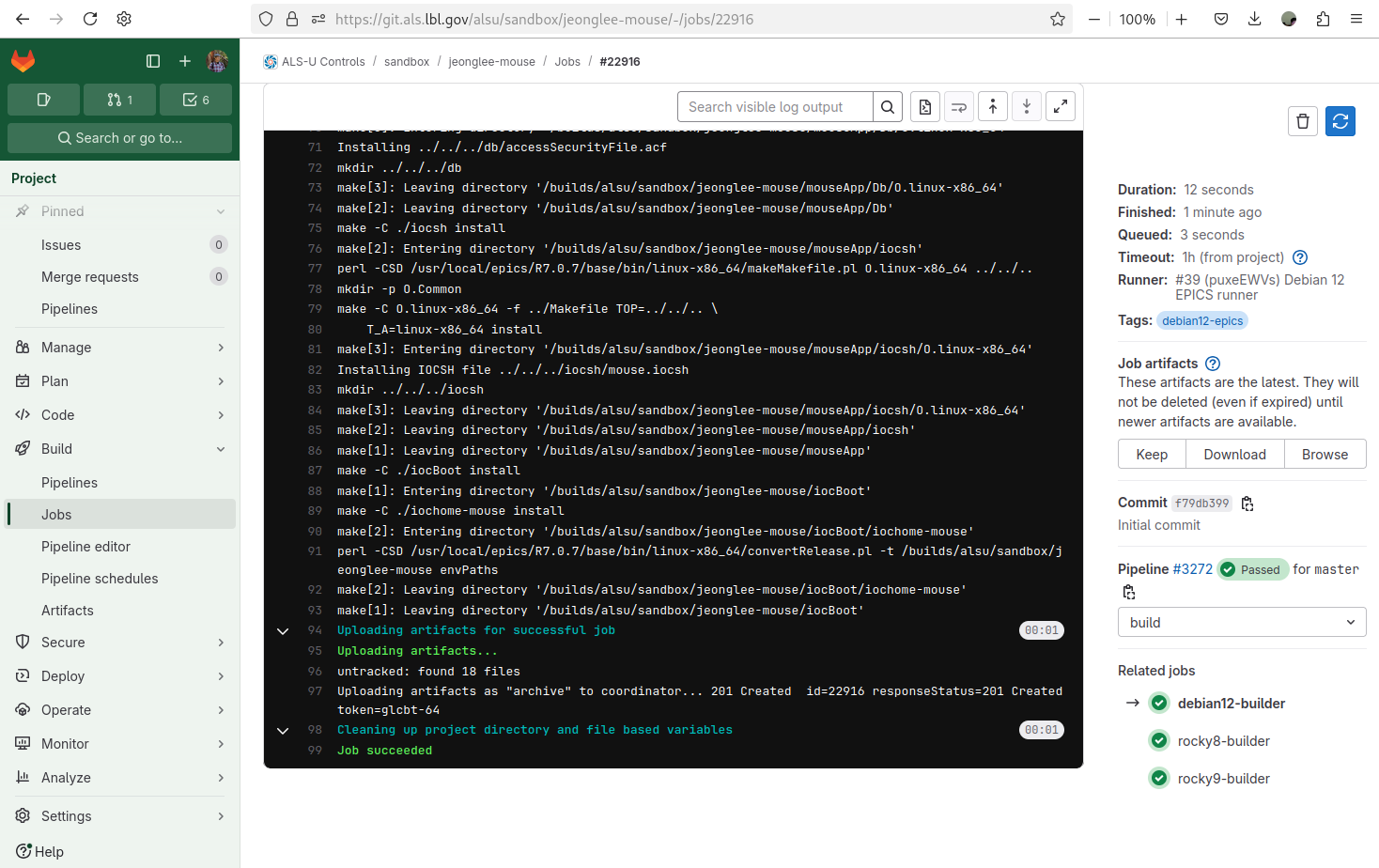

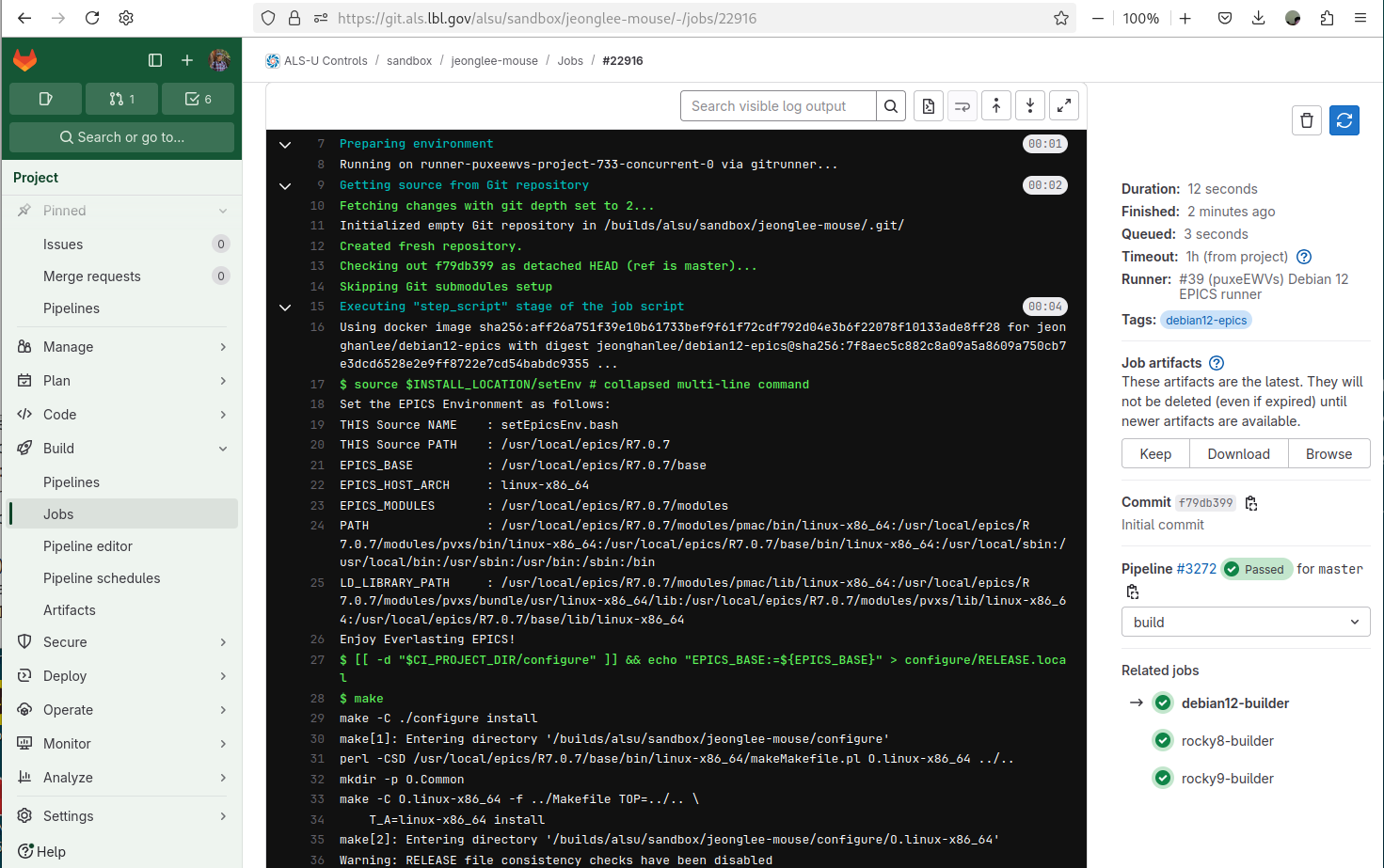

debian12-builderwithinJobs

|

|---|

| Figure 8 ALS-U GitLab Sandbox - debian12-builder |

Congratulations! Your IOC build completed successfully! Before moving on to the next step, scroll up in the debian12-builder log to see the beginning of the process. Note that the Enjoy Everlasting EPICS! line marks the starting point.

|

|---|

| Figure 9 ALS-U GitLab Sandbox - debian12-builder - no .sitemodule |

Add .sitemodules dependency

If your IOC requires site-specific modules, you must add a .sitemodules file to the top-level directory of your IOC.

Go to your IOC folder and add the .sitemodules file.

mouse (master)$ echo ".sitemodules" > .sitemodules

mouse (master)$ git add .sitemodules

mouse (master)$ git commit -m "add .sitemodules"

mouse (master)$ git push

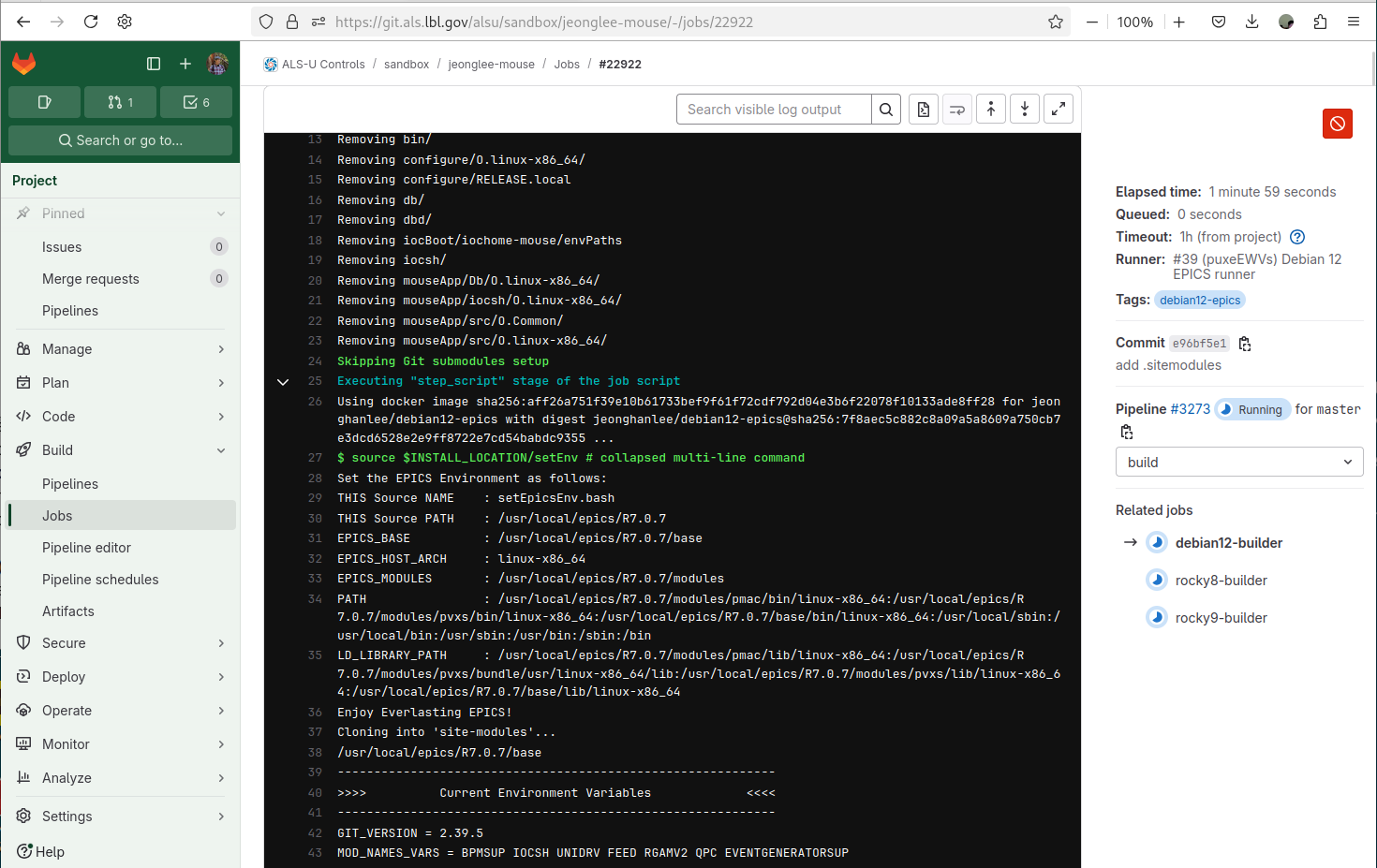

Check the CI Process

Look for the Enjoy Everlasting EPICS! line (line 38 in this example log). After this line, you can see a new log entry: Cloning into 'site-modules'....

If you see that message, you have set up the .sitemodules file correctly. The overall CI run takes longer because these site modules must also be compiled.

|

|---|

| Figure 10 ALS-U GitLab Sandbox - debian12-builder - .sitemodule |

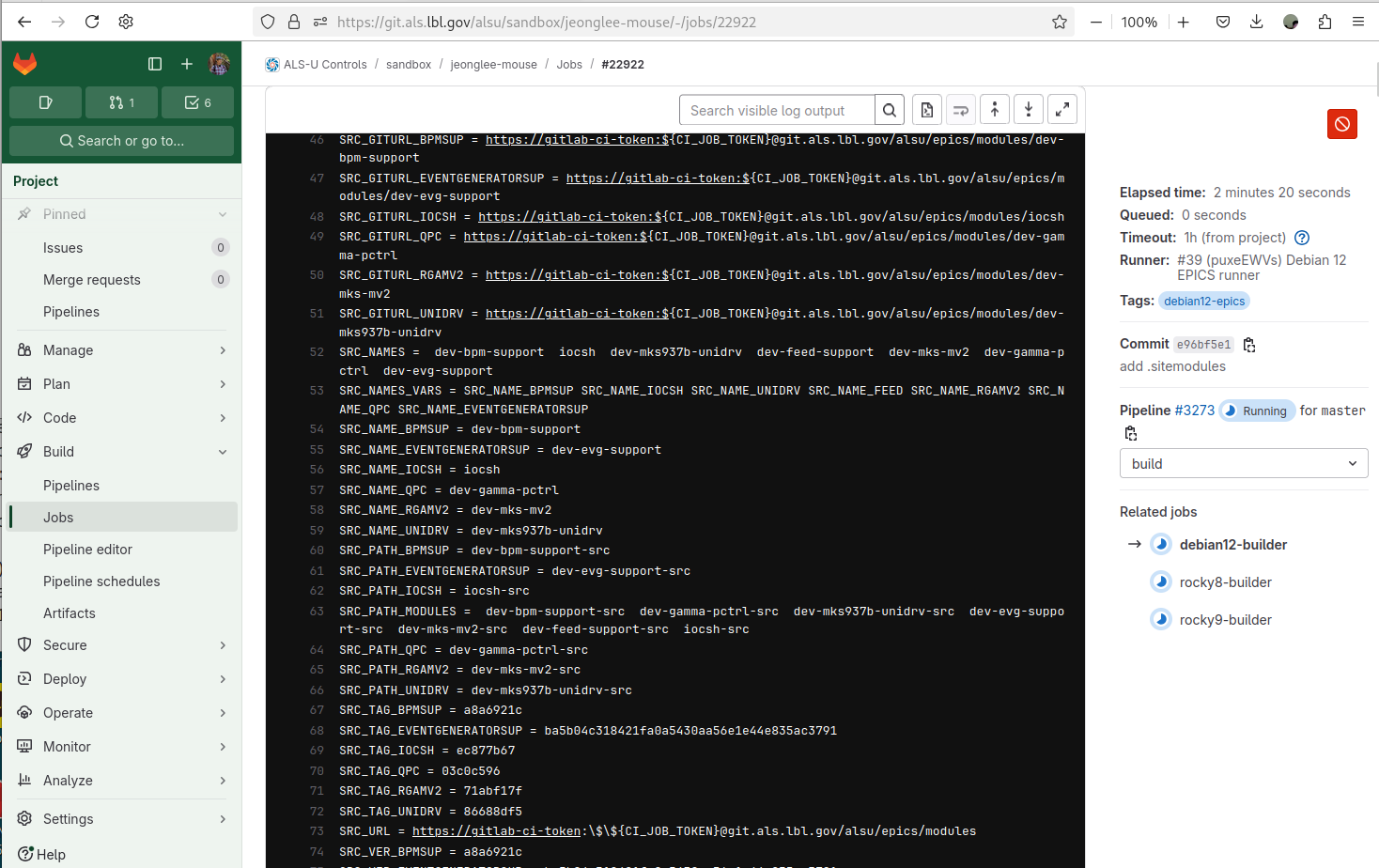

Scroll down further to see detailed information about your site modules.

Figure 11 ALS-U GitLab Sandbox - debian12-builder - .sitemodule

Chapter 3: Second EPICS IOC and Device Simulation

This chapter builds on the previous examples by guiding you through configuring a second EPICS IOC. A key focus is simulating device communication – specifically using a TCP-based simulator to mimic interactions often performed over serial interfaces. You will learn to set up this simulation and test the communication between the IOC and the simulator.

This chapter covers the following topics:

- Configure the Second IOC: Setting up and configuring a second IOC application, potentially introducing device support relevant for external communication.

- Create the TCP Simulator: Developing a simple TCP server application to simulate responses from a hardware device (like one communicating over serial).

- Test IOC-Simulator Communication: Testing the interaction between your second IOC and the device simulator.

3.1 Your Second ALS-U EPICS IOC

In the previous lesson, you created a basic IOC structure. Now, we’ll add functionality to communicate with external hardware, specifically a device connected through a serial interface (serial over TCP/IP). We’ll use two of the most popular EPICS modules:

- Asyn: Provides a generic, layered approach to hardware communication (serial, GPIB, IP, etc.). We’ll use it to manage the serial port itself.

- StreamDevice: Builds on Asyn to allow communication with text-based (ASCII) command/response devices using simple protocol files, avoiding the need to write custom C/C++ device support for many common cases.

We will later simulate the serial device using a TCP listening utility (like socat, or tcpsvd), allowing you to test the IOC without needing physical hardware that speaks TCP/IP.

Lesson Overview

In this lesson, you will learn to:

- Add and configure EPICS modules for device communication (e.g., Asyn, StreamDevice).

- Define and implement device interaction logic using a StreamDevice protocol file (proto) and a database records file (db).

- Modify the IOC build (RELEASE, Makefile) and startup (st.cmd) configurations for the device communication.

- Build the IOC and examine its results.

Step 1: Generate the IOC Structure

First, ensure your EPICS environment is set up, then use the template generator script to create a new IOC structure. We’ll use jeonglee-Demo for the APPNAME and B46-182 for the LOCATION in this example. Replace jeonglee-Demo with your preferred name if desired.

# 1. Set up the EPICS environment

$ source ~/epics/1.1.1/debian-12/7.0.7/setEpicsEnv.bash

# 2. Run the generator script

# (Ensure you are NOT inside the previous 'mouse' or 'tools' directories)

$ bash tools/generate_ioc_structure.bash -l B46-182 -p jeonglee-Demo

# 3. Change into the newly created IOC directory

$ cd jeonglee-Demo

# 4. (Optional) View the top-level directory structure

jeonglee-Demo $ tree --charset=ascii -L 1

.

|-- configure

|-- docs

|-- iocBoot

|-- iocsh

|-- jeonglee-DemoApp

|-- Makefile

`-- README.md

# Note: bin, lib, db, dbd directories will be created after building.
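As a quick sanity check on the generated structure, the sketch below tests that a directory contains the top-level entries shown in the tree output above. The helper name check_ioc_layout is hypothetical and not part of the ALS-U tools, and the demonstration runs against a throwaway directory rather than a real IOC.

```shell
# check_ioc_layout TOP APPNAME
# Hypothetical helper: report any top-level entry from the tree listing
# that is missing under TOP. Returns 0 when everything is present.
check_ioc_layout() {
    cil_missing=0
    for cil_entry in configure docs iocBoot iocsh "${2}App" Makefile README.md; do
        if [ ! -e "${1}/${cil_entry}" ]; then
            echo "missing: ${cil_entry}"
            cil_missing=1
        fi
    done
    return "$cil_missing"
}

# Demonstration on a temporary directory that mimics the generated layout.
demo_top="$(mktemp -d)"
for d in configure docs iocBoot iocsh jeonglee-DemoApp; do
    mkdir -p "${demo_top}/${d}"
done
touch "${demo_top}/Makefile" "${demo_top}/README.md"

check_ioc_layout "${demo_top}" jeonglee-Demo && echo "layout OK"
```

Inside a real IOC you would call it as check_ioc_layout . jeonglee-Demo from the top-level directory.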

Step 2: Configure Dependencies (configure/RELEASE)

We need to tell the build system that our IOC depends on Asyn, Calc, and StreamDevice. Edit the configure/RELEASE file using your preferred text editor. In the ALS-U EPICS Environment, StreamDevice is built with support for the sCalcout record, so your IOC must also declare the Calc module as a dependency.

jeonglee-Demo $ nano configure/RELEASE # Or vi configure/RELEASE

Find the module definitions section and uncomment the lines for ASYN, CALC, and STREAM by removing the leading #:

# Snippet from configure/RELEASE after uncommenting ASYN, CALC, and STREAM

# Keep each of these lines free of trailing spaces and inline comments

...

ASYN = $(MODULES)/asyn

...

CALC = $(MODULES)/calc

...

STREAM = $(MODULES)/StreamDevice

...

Save and close the configure/RELEASE file.

Note that there must be no trailing whitespace or additional characters after the values of these variables. make keeps whitespace that follows a value as part of the variable, which breaks the paths built from it, and you may see an error like the following:

# Error example for an ASYN path with trailing whitespace

make: *** /home/jeonglee/epics/1.1.1/debian-12/7.0.7/base/../modules/asyn: Is a directory. Stop.
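One way to catch this before building is to search the file for assignment lines that end in whitespace or carry an inline comment. The following is a minimal sketch using grep; the function name find_trailing_ws is hypothetical, and the demonstration writes its own temporary file instead of touching your configure/RELEASE.

```shell
# find_trailing_ws FILE
# Print (with line numbers) uncommented assignment lines whose value is
# followed by whitespace, optionally with an inline comment after it.
# Returns 0 if at least one such line is found.
find_trailing_ws() {
    grep -nE '^[[:space:]]*[A-Za-z_][A-Za-z0-9_]*[[:space:]]*=.*[[:space:]]+(#.*)?$' "$1"
}

# Demonstration: the first line ends with a single space, the second is clean.
demo_release="$(mktemp)"
printf '%s\n' 'ASYN = $(MODULES)/asyn ' 'CALC = $(MODULES)/calc' > "$demo_release"

find_trailing_ws "$demo_release" || echo "no trailing whitespace found"
# Prints line 1 only: 1:ASYN = $(MODULES)/asyn (followed by the trailing space)
```

Run against the real file, the call would be find_trailing_ws configure/RELEASE from the top of the IOC.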

Step 3: Understanding Dependency Handling in jeonglee-DemoApp/src/Makefile

After defining module dependencies in configure/RELEASE (Step 2), the EPICS build system uses Makefiles like <APPNAME>App/src/Makefile to determine which components to include when building the final IOC application.

You can examine this file to see how standard dependencies, like the ones you just uncommented, are typically handled automatically by the template generator.

Let’s examine the jeonglee-DemoApp/src/Makefile:

jeonglee-Demo $ less jeonglee-DemoApp/src/Makefile

Inside this file, you will find standard conditional logic, often using ifneq blocks (meaning “if not equal” - essentially checking if the variable is defined/non-empty), similar to the snippet below:

# Snippet from jeonglee-DemoApp/src/Makefile showing standard conditional inclusion

PROD_IOC = jeonglee-Demo

# jeonglee-Demo.dbd will be created and installed

DBD += jeonglee-Demo.dbd

Common_DBDs += base.dbd

Common_DBDs += system.dbd # Specific additions may exist

# Add custom C/C++ source files here if needed

Common_SRCs +=

# --- Automatic inclusion based on configure/RELEASE ---

ifneq ($(ASYN),) # If ASYN was defined (uncommented) in RELEASE

Common_DBDs += asyn.dbd # include Asyn's dbd files

Common_DBDs += drvAsynIPPort.dbd

Common_DBDs += drvAsynSerialPort.dbd # Note: Both IP and Serial DBDs are included by default

Common_LIBs += asyn # and link against the Asyn library

endif

ifneq ($(CALC),) # If CALC was defined in RELEASE

Common_DBDs += calcSupport.dbd # include its dbd

Common_LIBs += calc # and link its library

endif

ifneq ($(STREAM),) # If STREAM was defined in RELEASE

Common_DBDs += stream.dbd # include its dbd

Common_LIBs += stream # and link its library

endif

...

Because of these standard ifneq blocks within the Makefile, the necessary database definitions (Common_DBDs) and libraries (Common_LIBs) for modules like ASYN, CALC, and STREAM are included automatically by the build system when you uncomment them in configure/RELEASE.

Therefore, no manual editing of jeonglee-DemoApp/src/Makefile is required just to include these standard module dependencies configured in Step 2. You would typically only edit this file if you were adding your own C/C++ source code files to Common_SRCs or needed to link other non-standard libraries manually.
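To preview which of these ifneq blocks are likely to be active, you can scan configure/RELEASE for uncommented assignments. The sketch below is only a text-level approximation: make itself decides the final values, and definitions pulled in from other included files are not seen. The function name enabled_modules is hypothetical.

```shell
# enabled_modules FILE
# Print each of ASYN, CALC, STREAM that has an uncommented, non-empty
# assignment in FILE (a rough stand-in for the ifneq checks).
enabled_modules() {
    for em_mod in ASYN CALC STREAM; do
        if grep -qE "^[[:space:]]*${em_mod}[[:space:]]*=[[:space:]]*[^[:space:]#]" "$1"; then
            echo "$em_mod"
        fi
    done
}

# Demonstration: CALC is still commented out in this temporary file.
demo_release="$(mktemp)"
printf '%s\n' \
    'ASYN = $(MODULES)/asyn' \
    '#CALC = $(MODULES)/calc' \
    'STREAM = $(MODULES)/StreamDevice' > "$demo_release"

enabled_modules "$demo_release"
# Prints:
# ASYN
# STREAM
```

For this lesson, all three names should be listed once Step 2 is complete.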

Step 4: Create StreamDevice and EPICS Database Files (jeonglee-DemoApp/Db)

Define the communication protocol and the EPICS records database:

- Create the Protocol File (training.proto)

jeonglee-Demo $ nano jeonglee-DemoApp/Db/training.proto

Add the following content:

# Protocol definition for basic command/query

sendRawQuery {

ExtraInput = Ignore; # Ignore any input bytes left over after the "in" format has matched

out "%s"; # Format to send: output the record's own string value (its VAL field)

in "%(\$1)40c"; # Format to read: read up to 40 chars (%40c) and redirect them to the record field passed as argument $1

}

Save and close the training.proto file.

- Create the EPICS Database File (training.db)

jeonglee-Demo $ emacs jeonglee-DemoApp/Db/training.db

Add the following record definitions:

# Database file for StreamDevice TCP communication example

# Record to send the query string via StreamDevice

record(stringout, "$(P)$(R)Cmd")

{

field(DESC, "Raw Query") # Description of the record

field(SCAN, "Passive") # Record only processes when explicitly written to

field(DTYP, "stream") # Use StreamDevice device support

field( OUT, "@training.proto sendRawQuery($(P)$(R)Cmd-RB.VAL) $(PORT)")

# Specify protocol file, protocol name, target PV for reply ($1), and Asyn Port name

}

# Record to receive the reply string read by StreamDevice

record(stringin, "$(P)$(R)Cmd-RB")

{

field(DESC, "Raw Query Readback") # Description

field(SCAN, "Passive") # Value is written by StreamDevice, not by scanning

field(DTYP, "Soft Channel") # Standard soft device support (no hardware access)

}

Save and close the training.db file.
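To see what the records will be called once the macros are filled in, the sketch below substitutes $(P), $(R), and $(PORT) textually with sed. The values TRAINING:, DEMO:, and DEV1 are hypothetical placeholders, not the ones used later in st.cmd, and the helper expand_macros is only an illustration; the IOC performs the real substitution when it loads the database.

```shell
# expand_macros P R PORT FILE
# Hypothetical helper: textually replace $(P), $(R), and $(PORT) in FILE.
# Assumes the values contain no '|' or '&' characters.
expand_macros() {
    sed -e 's|\$(P)|'"$1"'|g' \
        -e 's|\$(R)|'"$2"'|g' \
        -e 's|\$(PORT)|'"$3"'|g' "$4"
}

# Demonstration on three lines taken from training.db.
demo_db="$(mktemp)"
cat > "$demo_db" <<'EOF'
record(stringout, "$(P)$(R)Cmd")
field(OUT, "@training.proto sendRawQuery($(P)$(R)Cmd-RB.VAL) $(PORT)")
record(stringin, "$(P)$(R)Cmd-RB")
EOF

expand_macros 'TRAINING:' 'DEMO:' 'DEV1' "$demo_db"
# Prints:
# record(stringout, "TRAINING:DEMO:Cmd")
# field(OUT, "@training.proto sendRawQuery(TRAINING:DEMO:Cmd-RB.VAL) DEV1")
# record(stringin, "TRAINING:DEMO:Cmd-RB")
```

The expanded OUT link shows how the reply is routed: the protocol argument $1 becomes the VAL field of the readback record.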

Step 5: Check the Makefile (jeonglee-DemoApp/Db/Makefile)

Now that you’ve placed your StreamDevice source files (training.proto, training.db) in the jeonglee-DemoApp/Db directory (Step 4), let’s look at how the build system includes them. Following EPICS conventions, the Makefile located within this same directory (jeonglee-DemoApp/Db/Makefile) is usually responsible for handling these types of files.

Often, this Db/Makefile is set up to automatically find and include any database (.db) and protocol (.proto) files placed within the jeonglee-DemoApp/Db directory. This means you usually don’t need to manually edit this Makefile every time you add a new .db or .proto file. Cases that do require manual edits are a more advanced topic, covered in a separate lesson.

Furthermore, the build process takes these source files found via the Db/Makefile and installs them into the standard runtime database directory. By default, for development builds, this location is $(TOP)/db, where $(TOP) refers to the top-level directory of your IOC source code. (The st.cmd script in Step 6 correctly uses a path relative to this runtime $(TOP)).

Important Note on Installation Paths: Be aware that for production deployments, the final installation location of the IOC (including its db directory) can be controlled by setting the INSTALL_LOCATION variable, which is typically done in the configure/CONFIG_SITE file. If INSTALL_LOCATION is used during the build, the runtime TOP directory (where the IOC executable runs from and finds its db folder) may be different from your source TOP directory. How to manage INSTALL_LOCATION is a more advanced topic for a separate lesson, but it’s useful to know that the runtime path isn’t always the same as the source path, although it defaults to that for simple builds.

Let’s examine the jeonglee-DemoApp/Db/Makefile:

jeonglee-Demo $ less jeonglee-DemoApp/Db/Makefile # Use less or your editor

Inside, you might find rules similar to the following (confirmed accurate for the ALS-U template), which use functions like wildcard and patsubst to achieve the automatic inclusion:

# Snippet from jeonglee-DemoApp/Db/Makefile showing automatic inclusion mechanism

# (This specific syntax uses wildcard/patsubst to find files in parent dir and adjust path)

...

# Example mechanism (details may vary but result is automatic inclusion):

DB += $(patsubst ../%, %, $(wildcard ../*.db))

DB += $(patsubst ../%, %, $(wildcard ../*.proto))

...

Because the template’s Db/Makefile is designed to automatically find .db and .proto files in the jeonglee-DemoApp/Db directory, no changes are needed in this Makefile for the training.db and training.proto files you created in Step 4. The build system automatically incorporates these files into the build process and handles their installation (by default to $(TOP)/db). This automation significantly simplifies the development workflow, especially for developers newer to EPICS or when creating less complex IOCs.
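The effect of the wildcard and patsubst lines can be reproduced in plain shell, which may help when checking which files the build will pick up. This sketch assumes, as the snippet's comment suggests, that the rules are evaluated from a build subdirectory of Db, so the sources are found one level up; the directory name O.demo and the helper list_db_files are hypothetical.

```shell
# list_db_files BUILD_DIR
# Emulate $(patsubst ../%, %, $(wildcard ../*.db)) and the .proto variant:
# look in the parent of BUILD_DIR and strip the leading "../" from each match.
list_db_files() {
    (
        cd "$1" || exit 1
        for ldf_file in ../*.db ../*.proto; do
            [ -e "$ldf_file" ] || continue   # skip patterns that matched nothing
            echo "${ldf_file#../}"           # same result as patsubst ../%, %
        done
    )
}

# Demonstration with a temporary Db-like directory and a build subdirectory.
demo_db_dir="$(mktemp -d)"
mkdir "${demo_db_dir}/O.demo"
touch "${demo_db_dir}/training.db" "${demo_db_dir}/training.proto"

list_db_files "${demo_db_dir}/O.demo"
# Prints:
# training.db
# training.proto
```

Files with other extensions in the same directory are ignored, which matches the two patterns in the Makefile snippet.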

Step 6: Configure Startup Script (iocBoot/iocB46-182-jeonglee-Demo/st.cmd)

Now, we need to configure the IOC’s startup script (st.cmd). This script runs when the IOC starts and is responsible for setting up communication, loading database records, and initializing the system. We will modify it to:

- Define the macros (